For years, a giant mystery confounded the world of medicine. How do proteins fold? The answer, elusive, held the key to life itself.

Then a heroic AI agent, AlphaFold, emerged from DeepMind’s depths.

It tackled the giant. And won.

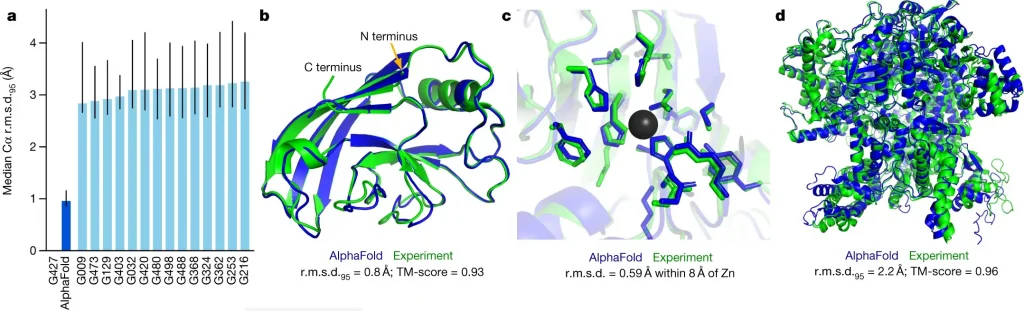

AlphaFold produces highly accurate protein structures

The implications? Beyond staggering. AlphaFold is just the beginning. The journey from niche to pervasive AI use has started. The power of one AI agent is clear. The potential of many working in sync is vast. This is our next frontier—the orchestration of AI agents.

As AI assistants become ubiquitous, let us explore how to unite these forces for humanity’s benefit.

Defining AI Assistants and Agents

AI assistants and agents are the backbone of a technological paradigm shift.

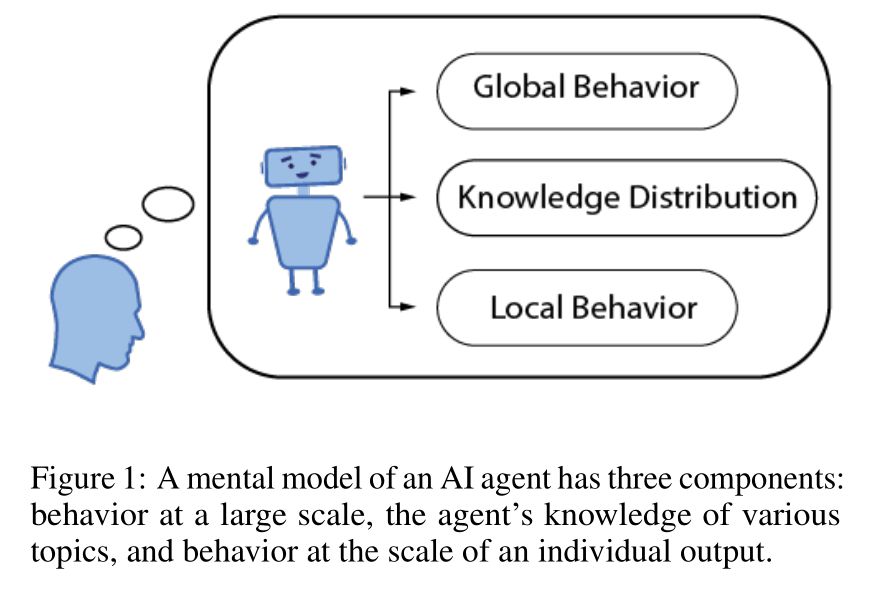

The term “agents” encompasses a broad spectrum of autonomy and capabilities. From simple, task-specific algorithms to complex systems capable of navigating real-world problems, agents represent a dynamic field with evolving definitions.

Like interns in a company, they learn, adapt, and execute tasks. But their realm is digital. They operate under oversight, mastering specific duties. These tasks vary, from data analysis to customer service, much as an intern’s role evolves over time.

AI agents come in two flavors – interactive and batch. Interactive agents engage in real-time, like a chatbot on a website. Batch agents work behind the scenes, processing data in large chunks. Each has its place, serving different but complementary roles.
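The difference between the two flavors can be sketched in a few lines of Python. This is a minimal illustration, not a real agent: `answer`, `interactive_agent`, `batch_agent`, and the `chunk_size` parameter are hypothetical names, and the handler just echoes its input where a real system would call a model.

```python
from typing import Callable, Iterable, List


def answer(query: str) -> str:
    """Hypothetical stand-in for the model or rule set doing the real work."""
    return f"echo: {query.strip().lower()}"


def interactive_agent(query: str, handler: Callable[[str], str] = answer) -> str:
    """Handle a single request and return right away, as a website chatbot would."""
    return handler(query)


def batch_agent(
    queries: Iterable[str],
    chunk_size: int = 2,
    handler: Callable[[str], str] = answer,
) -> List[str]:
    """Process queued requests in fixed-size chunks, as a background job would."""
    results: List[str] = []
    chunk: List[str] = []
    for query in queries:
        chunk.append(query)
        if len(chunk) == chunk_size:
            results.extend(handler(item) for item in chunk)
            chunk = []
    # Flush whatever is left in the final, possibly partial, chunk.
    results.extend(handler(item) for item in chunk)
    return results
```

Both functions share the same handler, which mirrors the point that the two flavors are complementary: the work is the same, and only the timing and grouping of requests differ.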

To bridge the gap between human expectations and digital execution, building trust is crucial. Just as we rely on an intern’s precision and dependability, we similarly anticipate these qualities from our digital counterparts. Missteps, such as an AI misinterpreting instructions, can range from minor annoyances to grave mistakes, especially in critical domains like healthcare or finance. Therefore, ensuring these agents are both trustworthy and verifiable becomes indispensable.

Lastly, AI agents thrive on domain knowledge. They are designed with specific goals in mind. Embedding this knowledge means an AI tailored for finance inherently understands market terms. Our role as humans is to give these digital entities a focused lens through which to view their tasks.

Why AI Assistants Must Be Task Specific

Task specificity is not just a feature—it is a necessity.

For AI assistants to be adopted, they must excel in specific, well-defined tasks. This specificity ensures they’re not just feasible but cost-effective. Think of it as hiring an expert for a job. You wouldn’t hire a plumber to fix a computer, right? The same goes for AI.

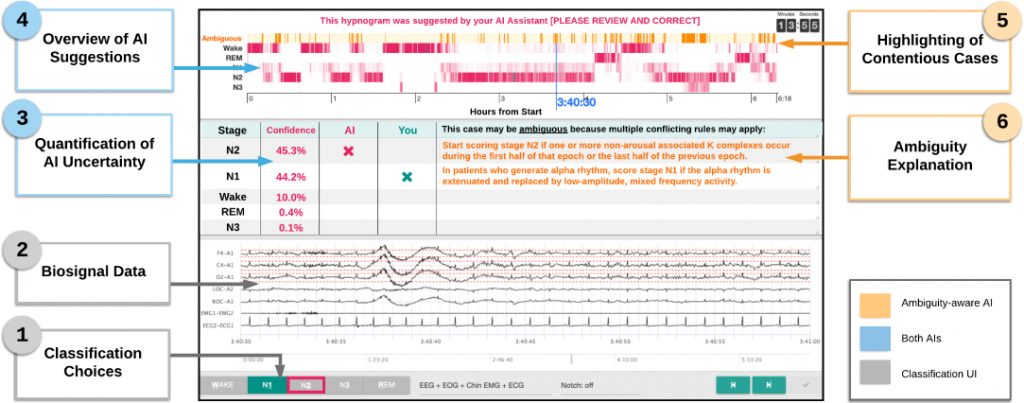

Ambiguity-aware AI assistants in medical data analysis

Performance thresholds are crucial for adoption. An AI assistant must meet high standards in accuracy, timing, auditability, and completeness. If it falls short, trust erodes. Imagine relying on an assistant for financial advice that’s often wrong or slow. It would be costly. Thus, crossing these thresholds is not optional.

But it’s not all about the numbers. Content and aesthetics matter too. Even the most accurate agent can be overlooked if its interface is clunky or its outputs are incomprehensible. In today’s world, where user experience is king, an AI’s design and presentation can make or break its adoption.

High-value tasks demand deep domain knowledge. Consider a medical AI assistant. To be effective, it must grasp the hospital’s ecosystem. This complexity and context-dependence mean that AI developers must embed significant domain knowledge into their creations. Without it, an AI assistant’s utility in specialized fields is limited.

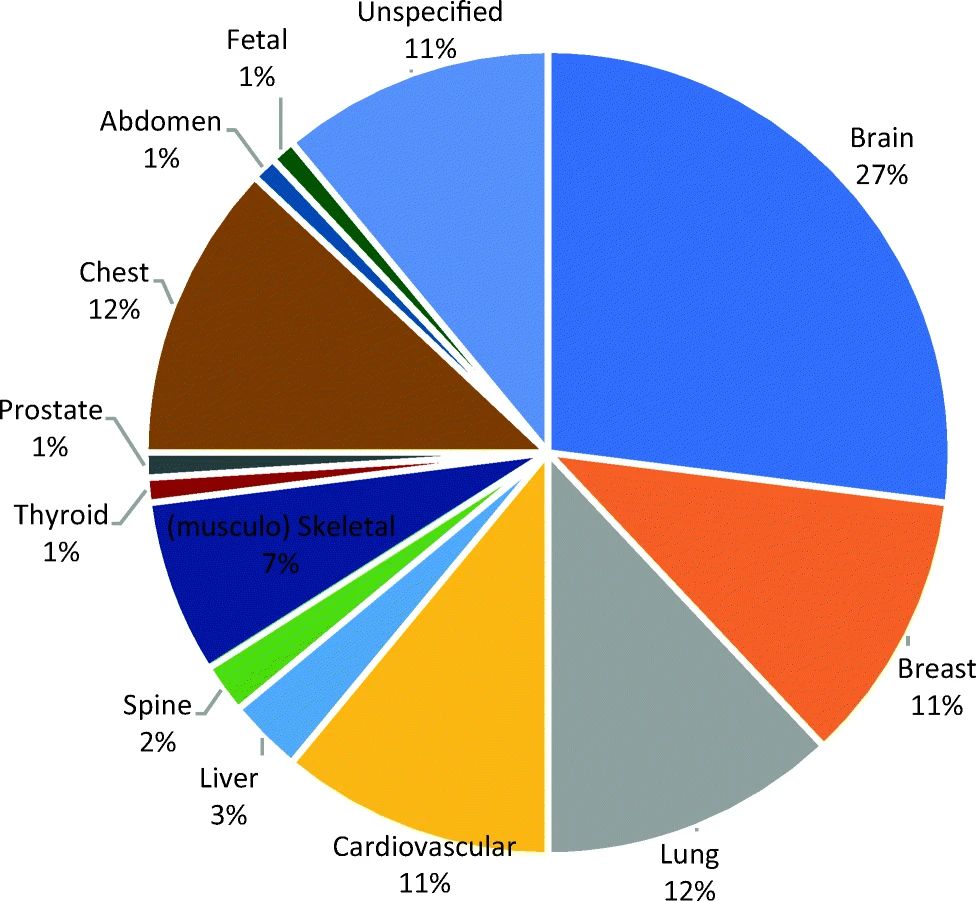

Certain systems, like diagnosis in healthcare, exemplify this complexity. Diagnosis is nuanced, requiring a deep understanding of medicine, patient history, and current research. A generalized medical assistant cannot be expected to excel in such a specialized area. The field of diagnosis needs a specialized assistant that’s finely tuned to its intricacies.

The share of AI applications focusing on a specific anatomic region in diagnostic radiology

This specificity underscores a larger truth: we can’t rely solely on generalized AI assistants. The demands of real-world applications often necessitate the use of multiple specialized assistants. Each must be expertly designed to tackle particular tasks. But having many specialized agents introduces a new challenge – orchestration.

Organizations must skillfully orchestrate these various assistants to ensure they work in concert, not at cross-purposes. This orchestration is key to unlocking the full potential of AI in complex, real-world scenarios.

Why AI Assistants Require Multi-Level Orchestration

Imagine you are at a bustling airport. Planes are taking off, landing, and parking.

Ground crews are in constant motion, and air traffic controllers orchestrate it all from their towers. This complex dance is a lot like managing multiple AI assistants in today’s business world.

Just as air traffic controllers ensure that planes move smoothly and safely, orchestrating AI assistants ensures that business processes run efficiently and effectively.

But why is this orchestration needed, and how does it work?

Sample framework for Autonomous AI Agents

Think of Multi-Level Orchestration as getting each musician (or AI assistant) to play a part in creating a harmonious symphony. But instead of music, we are creating streamlined business processes.

The Need for Multi-Level Orchestration

Businesses today are like intricate machines, with countless gears and cogs working together. Each AI assistant is a cog in this machine, designed to perform specific tasks.

However, these cogs can’t operate in isolation for the machine to work. They need to be synchronized. That’s where multi-level orchestration comes in. It ensures that each AI assistant works in concert with others, reflecting the complex workflows of real-life businesses.

The perks? Efficiency skyrockets. Data management becomes a breeze. Complex tasks are handled with a coordinated effort that seems almost effortless.

Imagine a marketing team where one AI assistant analyzes customer data, another crafts personalized emails, and a third schedules these emails. Multi-level orchestration ensures they work together seamlessly, maximizing impact.

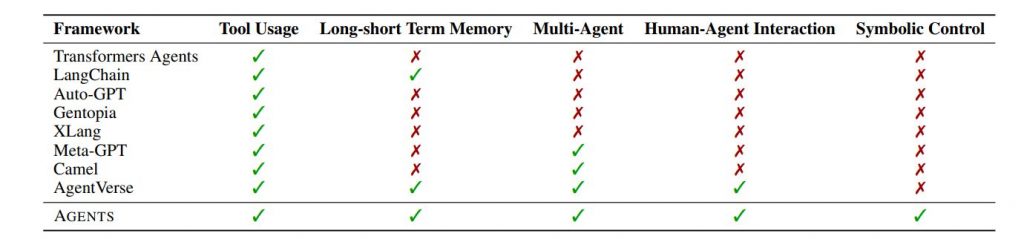

Comparison of Language Agent Frameworks

Inter-agent orchestration refers to the coordination between different AI agents. In a system with multiple agents, each with its own capabilities and tasks, inter-agent orchestration ensures that these agents work together effectively.

This involves communication and collaboration between agents, each contributing unique skills and knowledge towards achieving shared goals.

For example, in a warehouse scenario, one robot might be responsible for identifying and categorizing packages, another for moving the packages, and yet another for optimizing the routes that the robots take. Inter-agent orchestration ensures that these robots work together as a team to manage the warehouse.
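The warehouse scenario can be sketched as three small functions and an orchestrator that controls the hand-offs between them. Everything here is an assumption made for illustration: the `Package` type, the 10 kg rule for "heavy", the zone names, and the function names are hypothetical, and real agents would be far richer than plain functions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Package:
    package_id: str
    weight_kg: float
    category: str = ""  # filled in by the classifier agent


def classify(packages: List[Package]) -> List[Package]:
    """Agent 1: label each package (hypothetical rule: 10 kg or more is 'heavy')."""
    for package in packages:
        package.category = "heavy" if package.weight_kg >= 10 else "light"
    return packages


def plan_routes(packages: List[Package]) -> Dict[str, List[str]]:
    """Agent 2: group package ids by destination zone, one zone per category."""
    routes: Dict[str, List[str]] = {}
    for package in packages:
        routes.setdefault(f"zone-{package.category}", []).append(package.package_id)
    return routes


def move(routes: Dict[str, List[str]]) -> List[str]:
    """Agent 3: 'execute' the routes and report what went where."""
    return [f"{pid} -> {zone}" for zone, ids in sorted(routes.items()) for pid in ids]


def orchestrate(packages: List[Package]) -> List[str]:
    """The orchestrator owns the order of hand-offs between the agents."""
    return move(plan_routes(classify(packages)))
```

The design choice worth noticing is that no agent calls another directly. Only `orchestrate` knows the sequence, so an agent can be swapped or reordered without touching the others.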

Intra-agent orchestration refers to the coordination within a single AI agent. This involves managing the different components or modules of an agent to ensure that it functions effectively.

For example, an AI agent might have a profile module that encapsulates its attributes, a memory module that stores its experiences and knowledge, a planner that strategizes and determines actions, and an executor that carries out these actions.

Intra-agent orchestration ensures that these modules work together seamlessly, enabling the agent to understand instructions, plan and reason, acquire knowledge, interact with its environment, and adapt based on feedback.
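A rough sketch of how the four modules might be wired together inside one agent follows. The class names mirror the modules described above, but their methods, the "then"-separated instruction format, and the result strings are assumptions chosen to keep the example short.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Profile:
    name: str
    role: str


@dataclass
class Memory:
    completed: List[str] = field(default_factory=list)

    def remember(self, step: str) -> None:
        self.completed.append(step)


class Planner:
    def plan(self, instruction: str, memory: Memory) -> List[str]:
        """Split an instruction into steps and skip those already in memory."""
        steps = [s.strip() for s in instruction.split(" then ") if s.strip()]
        return [s for s in steps if s not in memory.completed]


class Executor:
    def execute(self, profile: Profile, step: str) -> str:
        """Pretend to carry out a step and describe the outcome."""
        return f"{profile.name} ({profile.role}) completed: {step}"


class Agent:
    """Intra-agent orchestration: the agent coordinates its own modules."""

    def __init__(self, profile: Profile) -> None:
        self.profile = profile
        self.memory = Memory()
        self.planner = Planner()
        self.executor = Executor()

    def run(self, instruction: str) -> List[str]:
        results: List[str] = []
        for step in self.planner.plan(instruction, self.memory):
            results.append(self.executor.execute(self.profile, step))
            self.memory.remember(step)  # feedback: later plans can skip this step
        return results
```

Running the same agent twice shows the memory module feeding back into planning: a step completed in the first run is not repeated in the second.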

Reliability Engineering: Ensuring AI Assistants are (Mostly) Well-behaved

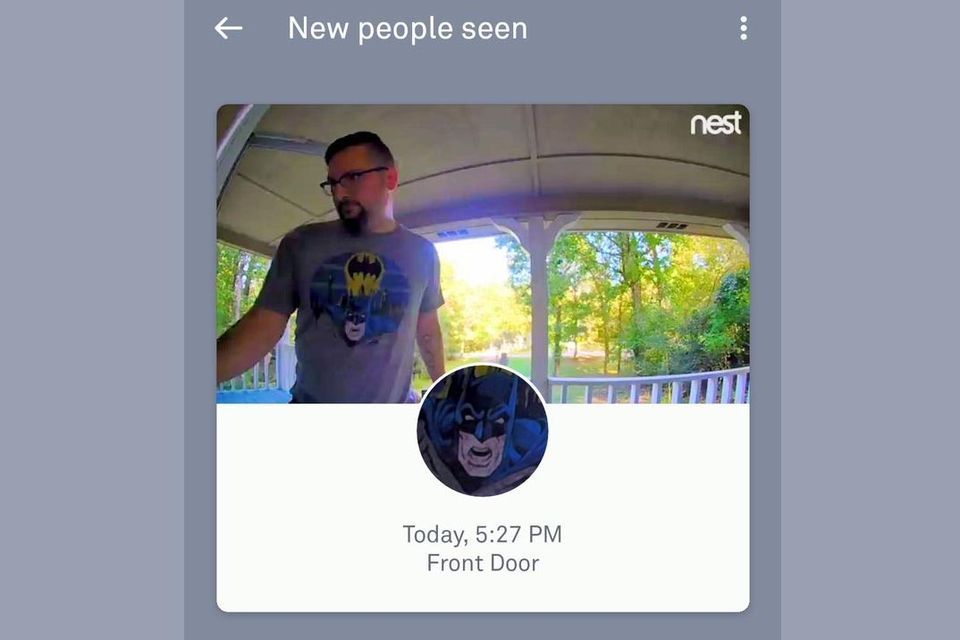

Georgia resident B.J. May was at the receiving end of a classic AI error one fine morning. He couldn’t get into his house because the “smart” facial recognition lock on his door thought he was – Batman. True story.

It underscores the adage in AI and machine learning circles – it’s a matter of “when, not if” mistakes will occur.

Automating complex tasks and orchestrating multiple AI agents to work together magnifies the potential for errors. Expecting perfection in such a complex, dynamic environment is just unrealistic. Instead, the focus should be on building resilient systems, ready to handle mistakes gracefully.

Designing a safer AI system hinges on robust safety nets, and two properties anchor them: accuracy and auditability. Accuracy means the AI performs tasks correctly within the limits set by policy layers. It is crucial where small errors could have large impacts, because systems must deliver the right outcomes consistently. Auditability lets us trace AI decisions by keeping a clear record of actions for review against ethical standards and legal requirements. This transparency builds trust: users and developers can see and understand what the AI did.

At the heart of these safeguards is the policy layer, a concept similar to human etiquette but for AI. This layer acts as a filter, sitting atop the AI’s core, scrutinizing its outputs before they reach the user.

It is a critical checkpoint, ensuring that the AI behaves in ways that align with our ethical standards and societal norms.

For instance, to prevent an AI trained on human speech from adopting offensive language, a policy layer could block profanity, ensuring the AI’s outputs remain appropriate. This mechanism reflects a broader principle – AI, like humans, needs guidance to navigate social interactions without causing offense.
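As a minimal sketch of the idea, a policy layer can be a function that sits between the model and the user and masks blocked words. The blocklist below is a hypothetical placeholder with deliberately mild words, and `apply_policy` and `respond` are illustrative names, not part of any particular product.

```python
import re
from typing import Iterable

# Hypothetical blocklist; a real deployment would maintain its own.
BLOCKED_WORDS = frozenset({"darn", "heck"})


def apply_policy(text: str, blocked: Iterable[str] = BLOCKED_WORDS) -> str:
    """Mask blocked whole words (case-insensitive) before text reaches the user."""
    words = sorted(blocked)
    if not words:
        return text
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(word) for word in words) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(lambda match: "*" * len(match.group(0)), text)


def respond(model_output: str) -> str:
    """Every model output passes through the policy layer on its way out."""
    return apply_policy(model_output)
```

Because the filter lives outside the model, the blocklist can be changed without retraining or redeploying the model itself.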

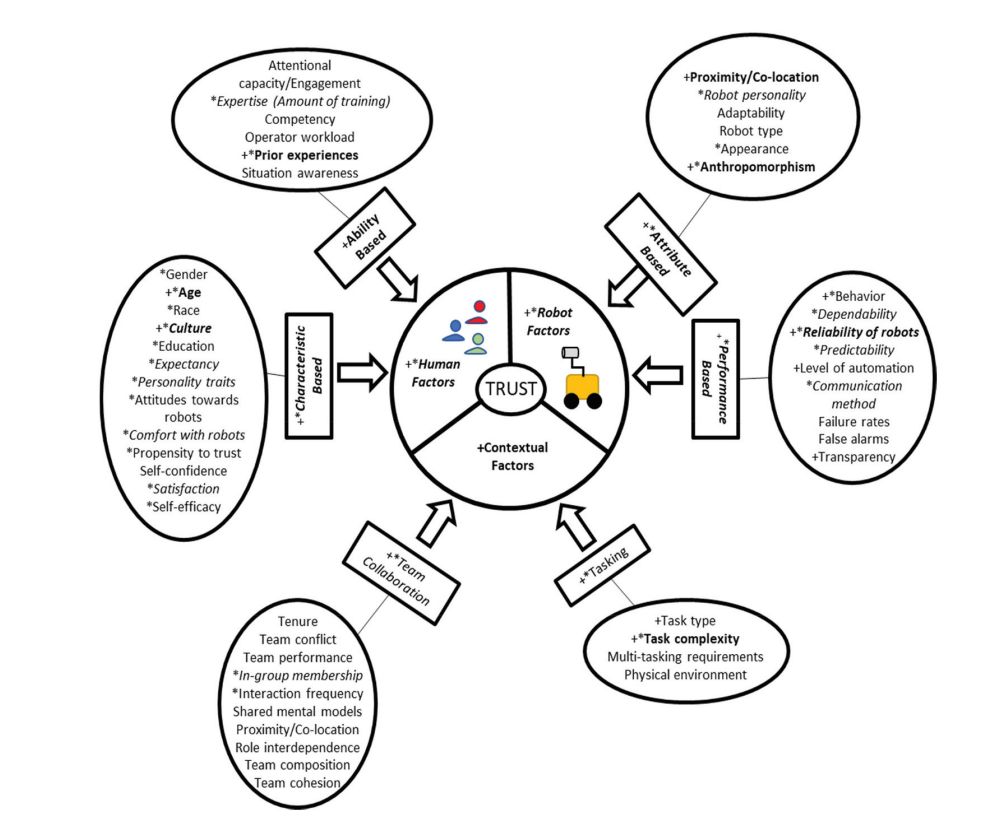

Elaborated model of human-robot trust

Beyond etiquette, ensuring AI reliability involves more sophisticated methods, such as reliability engineering and incorporating humans-in-the-loop. These approaches involve constant monitoring and intervention, where humans play a crucial role in vetting AI decisions before they’re finalized.

However, this method hinges on the vigilance of these human overseers, who must remain critical and skeptical so that errors do not slip through. Designing AI with these safety nets (policy layers, human oversight, and engineered reliability) requires asking key questions.

Does the policy layer effectively mediate between the AI and the user?

Can the system components be independently updated without causing disruptions?

Is the policy layer adaptable to swiftly address unforeseen issues?

Answering ‘yes’ to all three lays a foundation for deploying AI systems that are not only effective but also respectful and safe.

Approaches to Designing Good AI Assistants

- Policy Layers: Implementing logic layers to filter and adjust AI outputs, ensuring they align with societal norms and business ethics.

- Reliability Engineering: Developing sophisticated monitoring systems and safety codes to anticipate and correct AI errors.

- Humans-in-the-Loop: Incorporating human oversight into the AI output approval process, adding a layer of critical evaluation to catch and correct potential mistakes.

- Pre-flight Checks: Regularly assessing the integration and functionality of policy layers and their adaptability to changes in the AI system or its environment.

- Accuracy Assurance: Developing AI assistants with a high threshold for precision in task execution and adherence to ethical guidelines, ensuring they act within the expected realms of operation.

- Auditability Frameworks: Establishing transparent procedures for tracking and reviewing agent decisions, fostering a culture of accountability and continuous improvement.
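Two of the approaches above, humans-in-the-loop and auditability, fit naturally together and can be sketched as a single approval gate. The risk score, the 0.5 threshold, and the names `AuditLog` and `review_gate` are assumptions for illustration; how risk is actually scored is outside the scope of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AuditLog:
    entries: List[Dict[str, str]] = field(default_factory=list)

    def record(self, action: str, decision: str, reviewer: str) -> None:
        self.entries.append(
            {"action": action, "decision": decision, "reviewer": reviewer}
        )


def review_gate(
    action: str,
    risk: float,
    approve: Callable[[str], bool],
    log: AuditLog,
    threshold: float = 0.5,  # hypothetical cut-off between low and high risk
) -> bool:
    """Auto-approve low-risk actions, send the rest to a human, and log every decision."""
    if risk < threshold:
        log.record(action, "approved", "auto")
        return True
    approved = approve(action)  # the human reviewer's verdict
    log.record(action, "approved" if approved else "rejected", "human")
    return approved
```

The `approve` callback stands in for the human reviewer. In practice it might open a ticket or prompt an operator, while the log gives reviewers a trace of who decided what.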

How AI Assistants Are Transforming Our World

Let’s briefly look at various sectors where AI assistants/agents have made a significant impact. Here is a list of key applications:

- Virtual Personal Assistants: AI assistants such as Siri, Alexa, and Google Assistant help users with tasks like setting reminders, playing music, and providing information.

- Recommendation Systems: AI agents power recommendation engines on platforms like Amazon and Netflix, suggesting products and content based on user behavior.

- Fraud Detection: In financial institutions, AI agents are used to detect and prevent fraudulent transactions by analyzing transaction patterns and anomalies.

- Autonomous Vehicles: AI agents are integral to the development of self-driving cars, using sensors and real-time data for navigation and decision-making.

- Customer Service Chatbots: AI-driven chatbots provide customer support by answering queries, resolving issues, and offering personalized assistance.

- Healthcare Diagnostics: AI agents assist healthcare professionals with diagnoses and treatment plans, and in some reported cases AI models have outscored human doctors on medical exams.

- Robotics: AI-powered robotic assistants are used for tasks such as carrying goods in hospitals, cleaning, and inventory management.

- Human Resource Management: AI assists in the hiring process by scanning job candidates’ profiles and aiding in blind hiring to reduce biases.

- Finance and Banking: AI models are used for credit decisions, risk management, quantitative trading, and providing personalized financial advice through chatbots.

- Government Services: AI agents improve public services in healthcare, education, and transportation by optimizing processes and enhancing decision-making.

- Environmental Protection: AI agents are being used to develop technologies for reducing pollution and protecting the environment.

Conclusion

We stand at the threshold of a new era for AI assistants and agents.

With OpenAI’s GPTs, the promise of a 7-trillion-dollar AI-driven new world order, and a projected 45% CAGR for the autonomous AI agents market, the writing is on the wall.

Imagine a future where every sector, every enterprise, thrives under the watchful guidance of AI. Where challenges are met with swift, precise solutions, tailored by the collective intelligence of an army of specialized AI assistants. Where efficiency is not just a goal, it is the baseline.

With such a confluence of technology and purpose, we may be able to realize our wildest hopes for the future.