Despite $200+ billion spent on ML tools, data science teams still struggle to productionize their data and ML models. We decided to do a deep dive and find out why.

Back in 1991, former US Air Force pilot and noted strategist John Boyd called for U.S. Military reforms after Operation Desert Storm. He noted that there were three basic elements that were necessary to win wars – “People, ideas, machines — in that order!”

The three elements to win wars, according to John Boyd

That order remains true even today, and not just in the context of military thinking but in business decision-making as well. Boyd knew that technology alone cannot be responsible for success; rather, success needs the alignment of technology with the people, their ideas, and the organizational or business context. Our fascination with technology often leads us to solutions that are very good in theory, like the F-111 or F-35, but not quite as effective on the battlefield, at least not like the F-16, which is still considered one of the most versatile fighter aircraft of all time.

The rules aren’t any different for data science

The past decade has seen data become the competitive differentiator for almost every industry, be it retail, real estate, finance, education, healthcare, entertainment, or manufacturing. This is partly due to data and technology becoming more accessible. As a result, companies have started investing in data infrastructure with the goal of maximizing ROI on data science spend, building flexible data operations, and accelerating innovation. They now spend over $200 billion on Machine Learning and Big Data tools, or what the industry calls the Modern Data Stack: an assembly of cloud-native tools that reflects how data should flow through an organization for it to be useful at scale and by multiple users.

However, according to Gartner, despite all these investments in maximizing ROI on ML spend, only 53% of projects make it from prototype to production.

Despite all the investment (money, time, resources, and valuable data science bandwidth), why is this number so low? We spoke to a number of practitioners and end consumers of data science (we’re refraining from calling this a survey because of the small sample size and the anecdotal approach to these conversations), and came back with a number of observations:

- Data science is getting siloed. Not only is data science today not actively involved in moving business outcomes, but some data scientists don’t even see that as their job. As a result, the models are irrelevant to the business by the time they’re production-ready. Ironically, the modern data stack, whose goal is to help organizations save time, effort, and money through better utilization and integration of data tools, is creating bottlenecks in the consumption of data.

- Data science teams are building technology instead of solving problems. We see many data science teams drive up solution complexity out of a desire to emulate Big Tech. They see themselves as mini-Ubers and reuse methods that are not fit for their context. As a result, they end up spending a lot of time building technology that arrives a little too late.

- Data science is missing ideas/strategies that allow it to be fit for context across the diversity of enterprises and objectives – small and large, high and low skill, accuracy vs cost, and other standard business considerations.

Understanding and fixing these is critical to the data science function’s impact.

Business is Data Science’s Business

Before there was big data, it was generally accepted that different departments would create and manage their own data, and use it in meaningful ways to achieve their goals. However, as the complexity of data increased, new big data tools emerged that needed specialized skills. Eventually, this meant data science became increasingly disconnected from the business functions and their day-to-day use case requirements.

Beyond the tools, the cultural disconnect between data science teams and business has only grown. Not only are the skills of the staff involved different, but their approaches are remarkably different. While the business teams are focused on the day to day operations and identifying ways to improve processes to improve efficiency, profitability, and more, the data science teams typically have a more R&D flavor in their approach.

We witnessed conflict between the business and data science teams around purpose, process, and outcomes in a number of organizations. This is not just a waste of time and resources but is also detrimental to the future of these organizations.

Data science is a core business function, and teams need to be accountable for driving organizational and business outcomes. Once the function is tied to results, data science initiatives will see a much higher success rate.

Data Science Teams’ Choices Are Driving Up Complexity

Shiny object syndrome, or “SOS”, is a core part of human nature: we tend to focus on the latest and greatest tools and technology, and on being ahead of the curve in adopting the modern data stack, all the while disregarding how useful any of it may actually be.

Unfortunately, the hierarchy proposed by John Boyd has been flipped on its head by most organizations, with technology taking precedence over ideas, and finally people. As a result, they tend to adopt new tools without thinking about the consequences, or how they would fit into their context. This becomes the single biggest roadblock. All the time goes towards experimenting with and maintaining complex technologies. There is little time spent in understanding impact, or exploring alternative approaches.

There is a Gap in Ideas

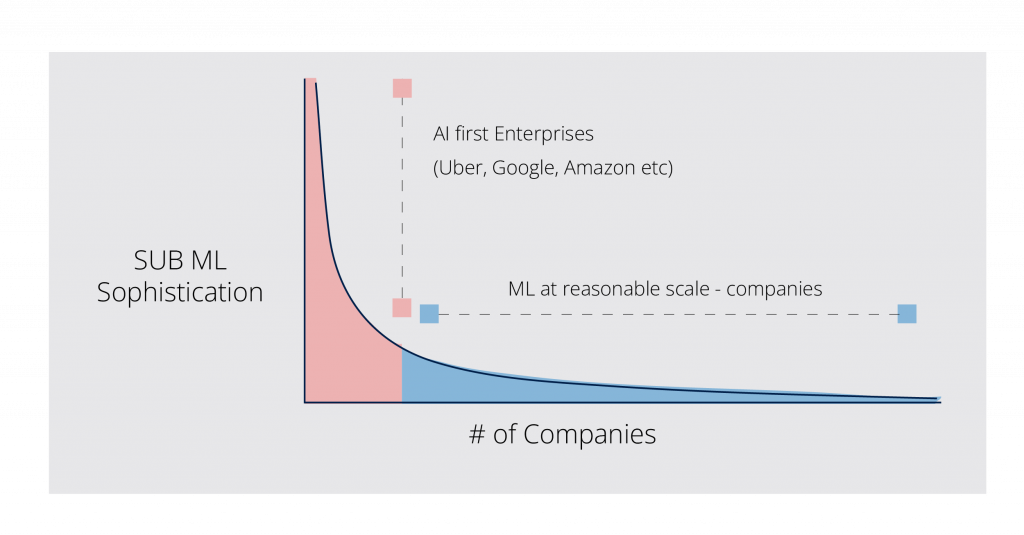

According to noted data scientist Mihail Eric, there are still a lot of ‘ML at reasonable scale’ or Sub-ML companies. He notes, “These companies stand to get their first wins with ML and generally have pretty low-hanging fruit to get those wins. They don’t even necessarily require these super-advanced sub-millisecond latency hyper-real-time pieces of infrastructure to start leveling up their machine learning.”

Long-tail distribution of “ML at reasonable scale” companies (Source: Mihail Eric)

If we go back to the military analogy, think about how the US Air Force still uses aircraft such as the F-16: aircraft that aren’t the latest and greatest, but serve the purpose better for most requirements. One of the key reasons is that these aircraft were designed with the needs of the user (the pilot) first.

Building an ML model isn’t enough; it needs to deliver in the context of the end-user. Similarly, decisions about tooling need to consider the requirements of the business and the end-users.

(Fun fact: John Boyd’s ideas had an impact on the design and acquisition of other major weapons systems including the A10 Warthog and Bradley Fighting Vehicle, and also on Operation Desert Storm)

Strategy First, Technology Later

Every organization today is turning into a data company. With data increasing in volume, depth, and accessibility across organizations, it has become the key to a sustainable competitive advantage. However, more often than not, big data is not used well. Organizations are better at collecting data (product usage, customer data, competitor data, etc.) than at designing a strategy around its usage. This brings us to another key element that Boyd spoke about: ideas.

Data science, being as dynamic as it is, requires ideas that benefit various stakeholders and functions within the organization. This will not only eliminate the siloed nature of data and decision sciences today but also help teams understand the nature and complexity of the problems, as well as the effort required to solve them. This is the key to scaling, and to making sure everyone in the organization is aligned on the value that data science brings.

We’re already seeing examples of this at play. For instance, Scribble Data’s Enrich feature store was used at Mars, a Fortune 100 company, by its People Analytics team to improve the way a number of key decisions were taken. Data scientists, business managers, and HR specialists worked together to improve the employee experience, incentive alignment, and churn modeling to help retain the best talent within the company. This work impacted multiple areas such as hiring, performance management, and employee engagement, all of which fall in the top right corner of the urgent/important matrix, given the Great Resignation.

Data science, by its very nature, is an evolving and uncertain undertaking, almost like a university project where all you have is either a fuzzy idea of the goal or a clearly defined goal that doesn’t offer much value to the business. It’s important to work with key stakeholders on clear, realistic ideas that define the priorities for the data science team, in order to see more success in projects and more buy-in from the leadership team.

The Machine Learning Stack Should be Fit for Purpose

Every year, billions of dollars are spent on data science tools which are overengineered and don’t serve the purpose of 90% of the organizations or their use cases. As a result, the incremental cost of complexity is much more than the incremental value of these tools. Here are a few points to consider when you’re building your data stack:

- Fit business constraints: Make your tooling investment decisions based on their expected outcome, which can be measured on a regular basis

- Right complexity: Identify tools that are fit for purpose, which will not only make the lives of the people using them easier but will also make data deeply relevant to individuals in the value chain

- Take things slow: Avoid making decisions based on what’s new and trending, or what would look good on paper. For example, an engineer we recently interviewed described how his former organization spent an inordinate amount of resources building real-time infrastructure, even though it saw only minimal uptake and was applicable to only 1% of their use cases.

Most importantly, the goal of the data stack is to eliminate data silos within the organization, which is why its tools all need to fit together. Better integration between these tools will make the data stack more verticalized and fit for purpose, offering significantly more value to organizations and stakeholders.

This article, written by Venkata Pingali, originally appeared in Hackernoon.