Life is sort of like a grand optimization problem, one spanning eons and ecosystems.

The players? Generations upon generations of organisms, each carrying an encoded blueprint – their genes – that shapes their form and function within the merciless theater of natural selection.

Those best adapted to their circumstances thrive and propagate, passing on the most successful portions of their genetic code. Those ill-equipped steadily fade into extinction’s oblivion. It is an endless process of adaptation, one evolutionary step at a time.

This primordial dance has inspired a pioneering approach to artificial intelligence: a technique that harnesses the raw power of Darwinian principles to bend machines to our will. These are genetic algorithms, an innovative twist on biological evolution’s ancient wisdom.

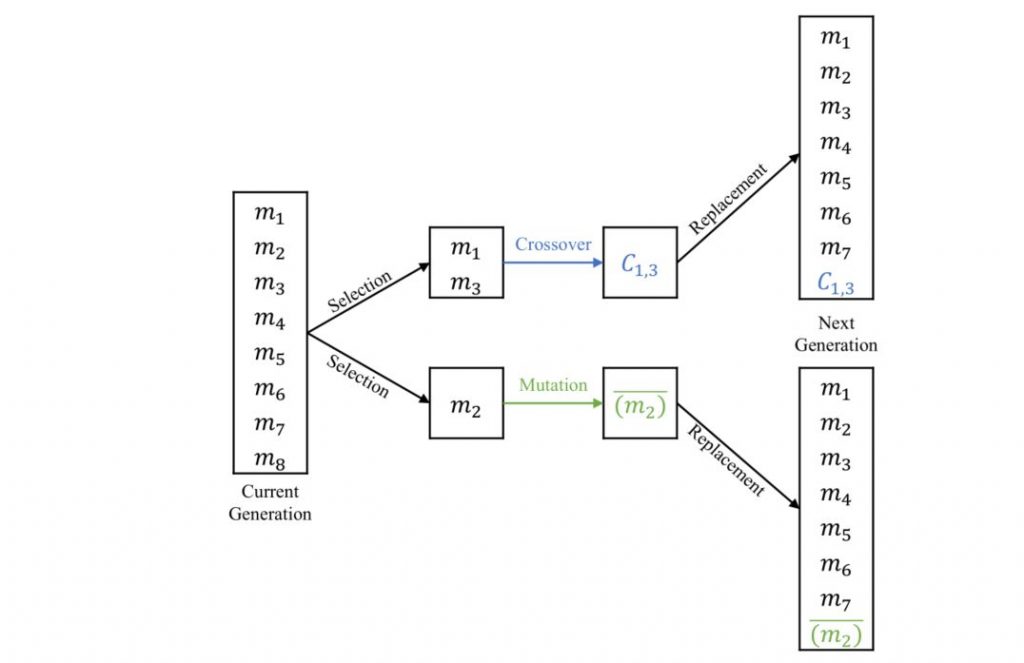

Within the silicon minds of modern computing systems, entire populations of candidate solutions are created, encoded as digital chromosomes. Through cycles of assessment and selective ‘breeding’, the fittest rise to the fore while the ill-adapted are culled. Genetic operators like crossover and mutation foster the exploration of new solution landscapes.

It is a turbocharged version of evolution’s proven recipe, one able to rapidly sculpt near-optimal problem solvers from the raw material of randomized initial guesses. From training the neural networks poised to master our world’s complexities to uncovering the ideal robot control laws, genetic algorithms have etched their footprint across AI’s frontiers.

As we dive into this algorithmic frontier, we will shed light on its inner workings, real-world applications, and the ingenious innovations that have driven its own evolution.

Fundamental Principles of Genetic AI Algorithms

Genetic algorithms take a page from nature’s book of evolution. Specifically:

- Natural Selection: In nature, fitter organisms are more likely to survive and reproduce. Similarly, these algorithms give the best candidate solutions the greatest chance of contributing to the next iteration.

- Genetic Variation: Biological offspring get a reshuffled mix of genes from parents, plus some chance mutations. Genetic algorithms create new solutions by combining parts of high-quality ones, while also introducing some random changes.

- Survival of the Fittest: Nature filters out ill-adapted organisms over generations. Genetic algorithms discard poor solutions from the pool over cycles, keeping only the top candidates.

At their core, genetic algorithms have a few key parts:

- Chromosomes/Genotypes: These are just encoded representations of the possible solutions to a problem.

- Population: The entire set of current candidate solutions being evolved.

- Fitness Function: A way to score each candidate solution, indicating how good or “fit” it is.
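To make these three parts concrete, here is a minimal sketch on a toy problem of our own choosing: evolve a bit string with as many 1s as possible. The names `CHROMOSOME_LENGTH`, `random_chromosome`, `init_population`, and `fitness` are illustrative assumptions, not part of any particular library.

```python
import random

# Hypothetical toy problem: maximise the number of 1-bits in a fixed-length string.
CHROMOSOME_LENGTH = 12

def random_chromosome(rng, length=CHROMOSOME_LENGTH):
    """Chromosome: one encoded candidate solution, here a list of 0/1 genes."""
    return [rng.randint(0, 1) for _ in range(length)]

def init_population(rng, size):
    """Population: the full set of candidates currently being evolved."""
    return [random_chromosome(rng) for _ in range(size)]

def fitness(chromosome):
    """Fitness function: higher is better. This toy version counts the 1-bits."""
    return sum(chromosome)

rng = random.Random(0)  # fixed seed so the run is repeatable
population = init_population(rng, size=6)
for individual in population:
    print(individual, "fitness =", fitness(individual))
```

In a real application the encoding and the fitness function are where most of the design effort goes; the rest of the algorithm is largely problem-independent.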

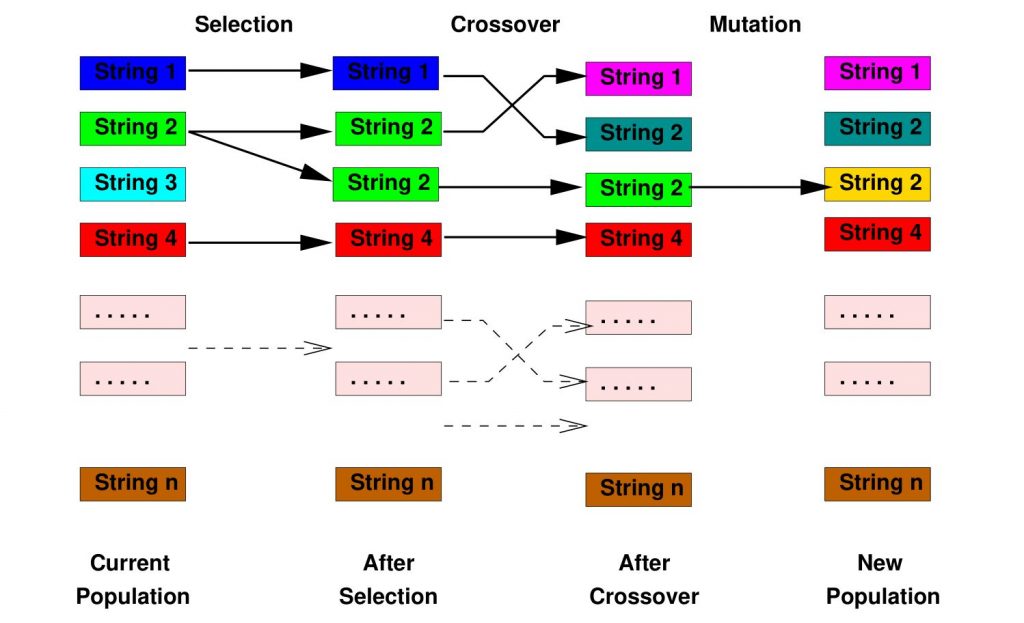

The basic iterative cycle goes like this:

- Start with a population of randomized candidate solutions.

- Evaluate and score each one using the fitness function.

- Select the highest scorers, the “fittest” solutions.

- “Breed” the selected solutions by combining parts of some, while randomly mutating others.

- This breeding creates a new population to start the cycle over.

Each cycle refines and evolves better solutions by selectively keeping and varying the most promising ones, just like how species evolve over generations in nature. The solutions incrementally improve their “fitness” over many rounds of this process.
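The cycle above can be sketched in a few dozen lines. This is one possible arrangement, not the canonical one: it assumes the same toy bit-counting fitness, tournament selection, one-point crossover, bit-flip mutation, and a single elite individual copied unchanged into each new generation. All function names and default parameter values are our own choices.

```python
import random

def fitness(bits):
    """Toy objective: count the 1-bits (higher is better)."""
    return sum(bits)

def tournament(rng, population, k=3):
    """Select: pick k random individuals and keep the fittest of them."""
    return max(rng.sample(population, k), key=fitness)

def crossover(rng, a, b):
    """Breed: one-point crossover, head of parent a plus tail of parent b."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(rng, bits, rate):
    """Flip each gene independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in bits]

def evolve(length=30, pop_size=40, generations=60, mutation_rate=0.02, seed=1):
    rng = random.Random(seed)
    # Start with a population of randomized candidate solutions.
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        # Evaluate and remember the current best.
        best = max(population, key=fitness)
        best_per_generation.append(fitness(best))
        # Build the next population; the elite copy means the best never gets worse.
        next_population = [best[:]]
        while len(next_population) < pop_size:
            child = crossover(rng, tournament(rng, population), tournament(rng, population))
            next_population.append(mutate(rng, child, mutation_rate))
        population = next_population
    return max(population, key=fitness), best_per_generation

best, history = evolve()
print("best fitness:", fitness(best), "out of", len(best))
```

Because of the elite copy, the best score recorded in `history` can only stay level or rise from one generation to the next.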

Applications of Genetic Algorithms in AI/ML

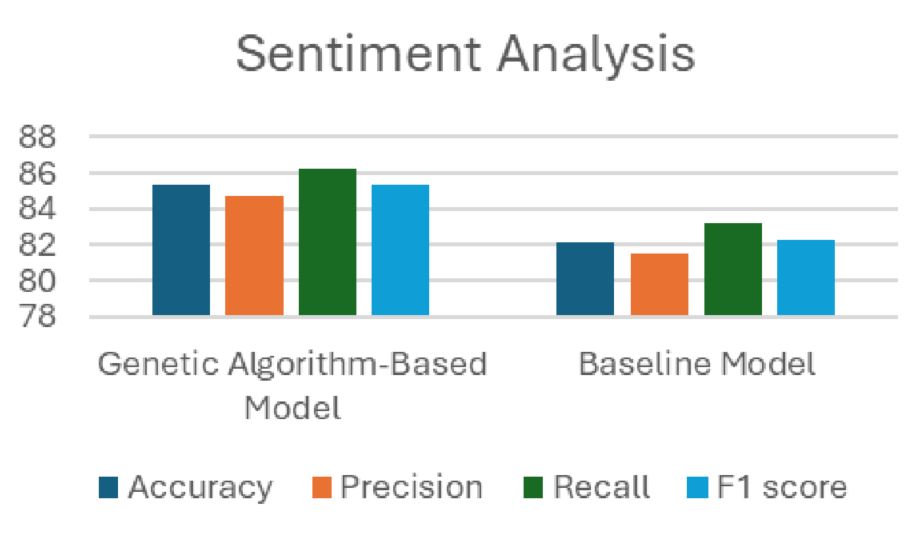

Results for Sentiment analysis showing accuracy, precision, recall, and F1 score

Genetic algorithms excel at solving complex optimization problems in AI and machine learning. They’re particularly useful for finding the best solution from a vast pool of possibilities. Here are a few examples:

- Neural Network Training: When training a neural network to recognize patterns, you need to fine-tune a huge number of internal parameters. Genetic algorithms can search through these parameter combinations without needing gradients, looking for a set that maximizes the network’s performance.

- Parameter Tuning: Many AI models have settings (like learning rate or regularization strength) that need to be just right for optimal performance. Genetic algorithms can evaluate different parameter combinations and evolve towards the best set.

- Scheduling/Planning: In complex scheduling scenarios like airport flight management, genetic algorithms can help by treating each possible schedule as a “chromosome” and evolving them to find the most efficient, delay-minimizing arrangement.
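As a rough illustration of the scheduling case, the sketch below treats a job order as a permutation chromosome. Everything here is an assumption made for the example: the `JOBS` table of (duration, weight) pairs, the weighted-completion-time cost, the simplified order-preserving crossover, and the truncation selection that keeps the better half.

```python
import random

# Hypothetical jobs as (duration, weight); a schedule is an ordering of job indices.
JOBS = [(4, 1), (2, 5), (6, 2), (1, 4), (3, 3), (5, 1)]

def cost(order):
    """Total weighted completion time of a schedule (lower is better)."""
    elapsed, total = 0, 0
    for job in order:
        duration, weight = JOBS[job]
        elapsed += duration
        total += weight * elapsed
    return total

def order_crossover(rng, a, b):
    """Keep a slice of parent a in place, fill the other slots in parent b's order."""
    i, j = sorted(rng.sample(range(len(a) + 1), 2))
    middle = a[i:j]
    rest = [g for g in b if g not in middle]
    return rest[:i] + middle + rest[i:]

def swap_mutation(rng, order, rate=0.2):
    """With probability `rate`, swap two positions; the result stays a permutation."""
    order = order[:]
    if rng.random() < rate:
        i, j = rng.sample(range(len(order)), 2)
        order[i], order[j] = order[j], order[i]
    return order

def evolve_schedule(pop_size=30, generations=50, seed=0):
    rng = random.Random(seed)
    population = [rng.sample(range(len(JOBS)), len(JOBS)) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=cost)
        parents = population[: pop_size // 2]  # truncation selection
        children = [
            swap_mutation(rng, order_crossover(rng, *rng.sample(parents, 2)))
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return min(population, key=cost)

best = evolve_schedule()
print("schedule:", best, "cost:", cost(best))
```

The point of the permutation-specific operators is that every child is still a valid schedule, so no repair step is needed after crossover or mutation.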

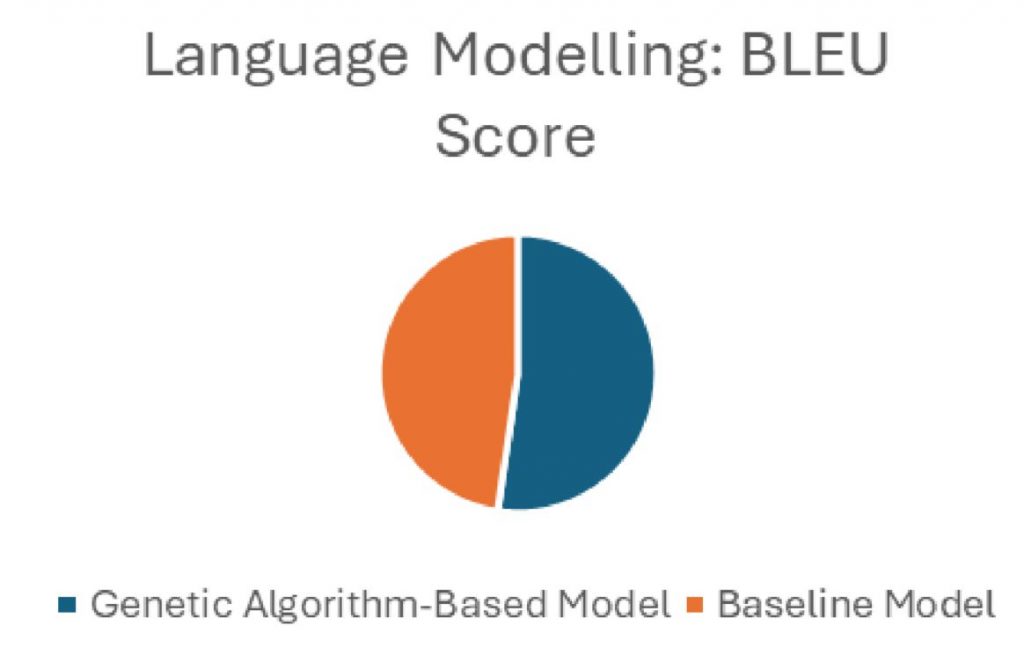

Results for Language Modelling showing the BLEU score

Genetic algorithms also assist in building and refining machine learning models:

- Feature Selection/Extraction: In machine learning, you often have a large number of input features to choose from. Genetic algorithms can help select the most informative features and even combine them in effective ways to create an optimal feature set for your model.

- Rule Induction/Extraction: Some models, like decision trees, learn by generating rules from data. Genetic algorithms can guide this process by evolving different rule sets and keeping the most accurate ones.

- Clustering: When you need to group similar data points (like dividing customers into segments based on purchasing behavior), genetic algorithms can evolve different cluster arrangements and converge on the most coherent groupings.
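Feature selection maps naturally onto a bit-mask chromosome: gene `j` is 1 if feature `j` is kept. The sketch below is a simplification under stated assumptions. It uses synthetic data in which only features 0 and 3 matter, and it scores a mask with a cheap filter-style proxy (absolute correlation with the target minus a per-feature penalty) rather than by training a model, which is what a practical setup would more likely do. All names and constants are hypothetical.

```python
import random

def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def make_relevance(rng, rows=200, n_features=8):
    """Synthetic data in which only features 0 and 3 drive the target."""
    X = [[rng.gauss(0, 1) for _ in range(n_features)] for _ in range(rows)]
    y = [row[0] + row[3] + rng.gauss(0, 0.1) for row in X]
    return [abs(pearson([row[j] for row in X], y)) for j in range(n_features)]

def make_fitness(relevance, penalty=0.3):
    """Reward relevant features, charge a fixed penalty for every feature kept."""
    def fitness(mask):
        return sum(r - penalty for r, bit in zip(relevance, mask) if bit)
    return fitness

def select_features(relevance, pop_size=20, generations=30, mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    fitness = make_fitness(relevance)
    n = len(relevance)
    population = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]  # uniform crossover
            children.append([1 - g if rng.random() < mutation_rate else g for g in child])
        population = parents + children
    return max(population, key=fitness)

relevance = make_relevance(random.Random(42))
print("selected mask:", select_features(relevance))
```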

Genetic algorithms also prove valuable in the realm of robotics and control:

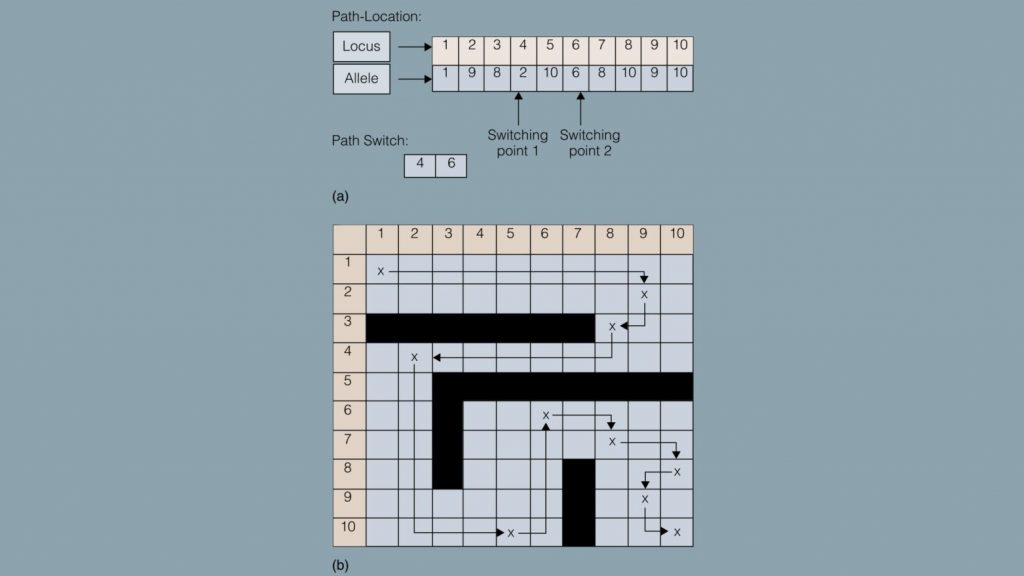

Robotic path switching using chromosome with switching points

- Path Planning: In scenarios like robotic navigation, each possible route can be treated as a “chromosome”. The algorithm evolves a population of paths, favoring those that are efficient, smooth, and collision-free. Over generations, it tends to converge on a near-optimal path.

- Control Strategy Optimization: Genetic algorithms can help tune the control mechanisms that guide a robot’s actions. Each set of control parameters forms a “chromosome”. The algorithm evolves them to find a configuration that enables the robot to perform its tasks effectively.
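For control tuning, the chromosome is usually a short vector of real numbers rather than a bit string. The sketch below is a toy under our own assumptions: a PI controller driving the first-order plant dx/dt = -x + u towards a target of 1.0, gains restricted to the range 0 to 10, a blend crossover, and Gaussian mutation. None of this comes from a specific robot or library.

```python
import random

def tracking_error(kp, ki, steps=200, dt=0.05, target=1.0):
    """Integrated absolute error of a PI controller on the toy plant dx/dt = -x + u."""
    x, integral, error_sum = 0.0, 0.0, 0.0
    for _ in range(steps):
        error = target - x
        integral += error * dt
        u = kp * error + ki * integral
        x += (-x + u) * dt  # forward-Euler integration step
        error_sum += abs(error) * dt
    return error_sum

def clip(value, low=0.0, high=10.0):
    """Keep a gain inside the assumed search range."""
    return max(low, min(high, value))

def tune_gains(pop_size=20, generations=30, sigma=0.3, seed=0):
    rng = random.Random(seed)
    cost = lambda gains: tracking_error(*gains)
    population = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=cost)
        parents = population[: pop_size // 2]  # keep the better half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            alpha = rng.random()
            # Blend crossover between the parents, then Gaussian mutation per gene.
            child = tuple(
                clip(x + alpha * (y - x) + rng.gauss(0, sigma)) for x, y in zip(a, b)
            )
            children.append(child)
        population = parents + children
    return min(population, key=cost)

kp, ki = tune_gains()
print(f"kp={kp:.2f} ki={ki:.2f} error={tracking_error(kp, ki):.3f}")
```

In practice each fitness evaluation would be a simulation or a hardware trial, which is why evaluation cost tends to dominate in this kind of application.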

From optimizing deep learning architectures to fine-tuning industrial control systems, genetic algorithms have demonstrated their versatility across AI and ML domains. They’re a go-to tool whenever you need to efficiently search a vast solution space, guided by the principles of selection and evolution.

Genetic Algorithms: Benefits and Drawbacks

Genetic algorithms offer several significant advantages in AI and ML applications:

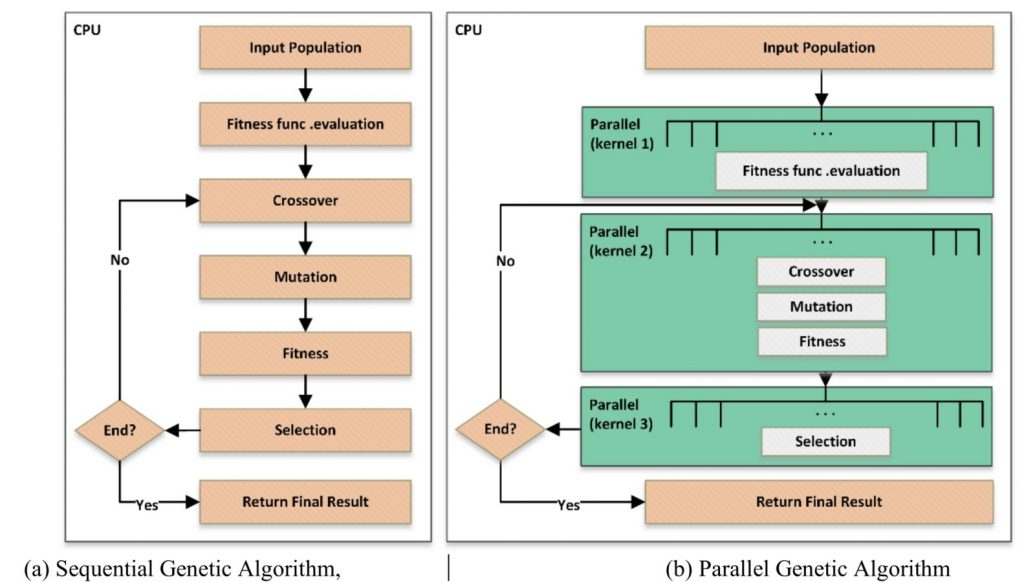

Sequential and parallel approaches for Genetic Algorithm

- Global Optimization Capability: Genetic algorithms are well suited to searching for the global optimum in a large and complex search space, although they do not guarantee finding it. Unlike some other optimization methods that may get trapped in local optima, genetic algorithms use a population-based approach and stochastic operators to maintain diversity and explore a wide range of solutions. This global search capability makes them well-suited for problems with many local optima or non-convex solution spaces.

- Parallelization Potential: The population-based nature of genetic algorithms makes them inherently parallelizable. Each individual in the population can be evaluated independently, allowing for efficient distribution of the computational workload across multiple processors or even across a cluster of machines. This parallel processing capability can significantly reduce the overall execution time, especially for computationally intensive problems with large population sizes.

- Flexibility and Versatility: Genetic algorithms can be applied to a wide variety of optimization problems, regardless of the specific domain or the nature of the objective function. They can handle both continuous and discrete variables, as well as non-linear and non-differentiable objective functions. If the problem can be formulated in terms of a fitness function that evaluates the quality of candidate solutions, genetic algorithms can be employed to search for optimal or near-optimal solutions.
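The parallelization point is easy to see in code, because fitness evaluation is a plain map over the population. The sketch below uses Python's standard `concurrent.futures` module; the `fitness` function is a hypothetical stand-in for an expensive evaluation, and the worker count is arbitrary.

```python
import random
from concurrent.futures import ThreadPoolExecutor

def fitness(chromosome):
    """Hypothetical stand-in for an expensive evaluation such as a simulation run."""
    return sum(g * g for g in chromosome)

def evaluate_population(population, workers=4):
    """Score every individual independently; results come back in population order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fitness, population))

rng = random.Random(0)
population = [[rng.uniform(-1, 1) for _ in range(5)] for _ in range(16)]
scores = evaluate_population(population)
print("best score:", max(scores))
```

Threads help most when the evaluation waits on I/O or on native code that releases the interpreter lock. For CPU-bound pure-Python fitness functions, `ProcessPoolExecutor` offers the same `map` interface, provided the fitness function is defined at module level so it can be pickled.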

Despite their strengths, genetic algorithms also have some limitations and challenges:

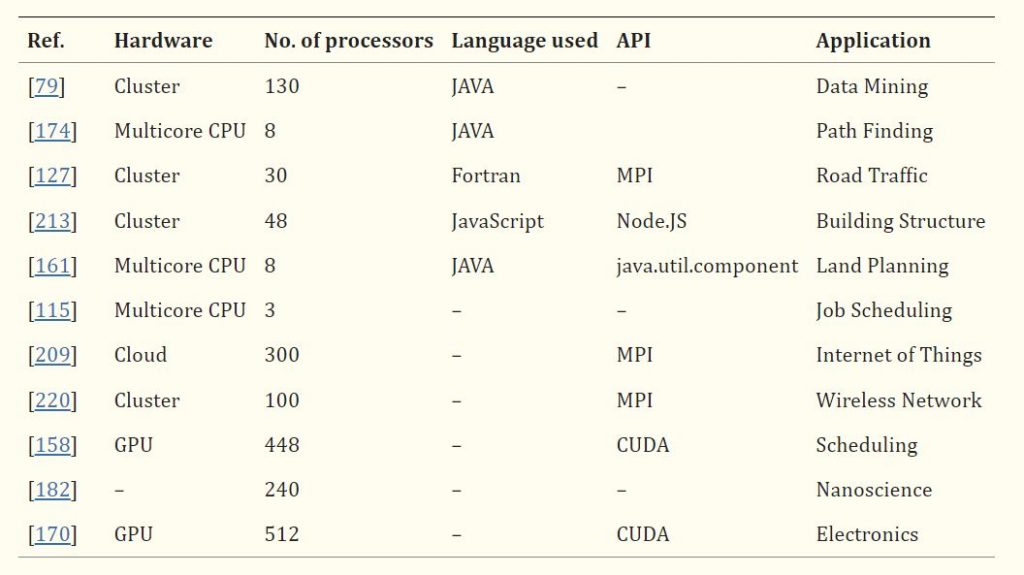

Analysis of parallel GAs in terms of hardware and software

- Computationally Expensive: The iterative nature of genetic algorithms and the need to evaluate the fitness of each individual in the population can make them computationally expensive, especially for problems with large search spaces or complex fitness evaluations. The computational cost increases with the population size and the number of generations required to converge to a satisfactory solution. This can be a significant limitation when dealing with real-time or resource-constrained applications.

- Parameter Tuning Challenges: The performance of genetic algorithms heavily depends on the choice of various parameters, such as population size, crossover rate, mutation rate, selection pressure, and termination criteria. Finding the optimal combination of these parameters can be challenging and often requires empirical tuning or even meta-optimization techniques. Improper parameter settings can lead to slow convergence, premature convergence, or poor solution quality.

- Premature Convergence Risk: Genetic algorithms can sometimes suffer from premature convergence, where the population becomes too homogeneous too quickly and gets stuck in a suboptimal solution. This can happen when the selection pressure is too high, causing the algorithm to focus too much on exploiting the current best solutions and not exploring new regions of the search space sufficiently. Premature convergence can be mitigated by techniques such as diversity preservation, niching, or adaptive parameter control.
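One simple way to watch for premature convergence is to track how different the individuals still are. The sketch below measures diversity as the mean pairwise Hamming distance of a bit-string population and raises the mutation rate when it falls below a threshold. The threshold and the two rates are illustrative assumptions, and this is only one of many diversity-preservation schemes.

```python
from itertools import combinations

def hamming(a, b):
    """Number of positions at which two chromosomes differ."""
    return sum(x != y for x, y in zip(a, b))

def diversity(population):
    """Mean pairwise Hamming distance, normalised to [0, 1]. Needs at least two individuals."""
    pairs = list(combinations(population, 2))
    length = len(population[0])
    return sum(hamming(a, b) for a, b in pairs) / (len(pairs) * length)

def adapted_mutation_rate(population, base_rate=0.01, boosted_rate=0.1, threshold=0.1):
    """Use a higher mutation rate once the population has become too homogeneous."""
    return boosted_rate if diversity(population) < threshold else base_rate

converged = [[1, 1, 1, 1]] * 5
mixed = [[0, 0, 0, 0], [1, 1, 1, 1]]
print(diversity(converged), adapted_mutation_rate(converged))  # 0.0 0.1
print(diversity(mixed), adapted_mutation_rate(mixed))          # 1.0 0.01
```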

While these disadvantages pose challenges, researchers and practitioners have developed various strategies to address them.

In the next section, we will explore some of these advanced concepts and techniques that can enhance the performance and efficiency of genetic algorithms in AI and ML applications.

Advanced Concepts

As the field of genetic algorithms in AI and ML has matured, researchers have developed several advanced concepts and techniques to enhance their performance and expand their applicability.

Hybrid Algorithms: One notable advancement is the development of hybrid algorithms that combine genetic algorithms with other optimization techniques. These hybrid approaches aim to leverage the strengths of different methods to overcome the limitations of individual algorithms.

For example, memetic algorithms integrate local search techniques into the genetic algorithm framework. After each generation, the individuals in the population undergo a local refinement phase, where they are improved using methods such as hill climbing or simulated annealing. This hybridization pairs the genetic algorithm’s broad exploration of the solution space with fine-grained local improvement, and often reaches high-quality solutions in fewer generations.
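A minimal sketch of the memetic idea, under our own assumptions: a small subset-sum style problem (`WEIGHTS` and `TARGET` are made up), a first-improvement hill climber that flips one bit at a time, and a generation step that refines every new child before it joins the population.

```python
import random

WEIGHTS = [13, 7, 22, 5, 18, 9, 31, 4, 16, 11]  # hypothetical item weights
TARGET = 60                                      # hypothetical target sum

def fitness(bits):
    """Closer to the target sum is better; 0 is a perfect match."""
    return -abs(TARGET - sum(w for w, b in zip(WEIGHTS, bits) if b))

def hill_climb(bits, max_passes=5):
    """Local search: accept any single-bit flip that improves fitness."""
    best = bits[:]
    for _ in range(max_passes):
        improved = False
        for i in range(len(best)):
            neighbour = best[:]
            neighbour[i] = 1 - neighbour[i]
            if fitness(neighbour) > fitness(best):
                best, improved = neighbour, True
        if not improved:
            break
    return best

def memetic_generation(rng, population, mutation_rate=0.05):
    """One GA generation in which every child is refined by local search."""
    ranked = sorted(population, key=fitness, reverse=True)
    parents = ranked[: len(ranked) // 2]
    children = []
    while len(parents) + len(children) < len(population):
        a, b = rng.sample(parents, 2)
        point = rng.randrange(1, len(a))
        child = [1 - g if rng.random() < mutation_rate else g for g in a[:point] + b[point:]]
        children.append(hill_climb(child))  # the local refinement phase
    return parents + children

rng = random.Random(3)
population = [[rng.randint(0, 1) for _ in WEIGHTS] for _ in range(10)]
for _ in range(5):
    population = memetic_generation(rng, population)
print("best fitness:", max(fitness(p) for p in population))
```

The trade-off is extra fitness evaluations per child, so the local search budget (`max_passes` here) is itself a parameter worth tuning.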

Another hybrid approach is the combination of genetic algorithms with machine learning models, such as neural networks or support vector machines. In these cases, the genetic algorithm is used to optimize the structure or parameters of the machine learning model, while the trained model’s performance (for example, its validation accuracy) serves as the fitness of each candidate solution. This synergy can lead to more efficient and effective optimization of complex ML models.

Multi-Objective Optimization: Many real-world problems involve optimizing multiple conflicting objectives simultaneously. Genetic algorithms have been extended to handle multi-objective optimization through techniques like Pareto optimization.

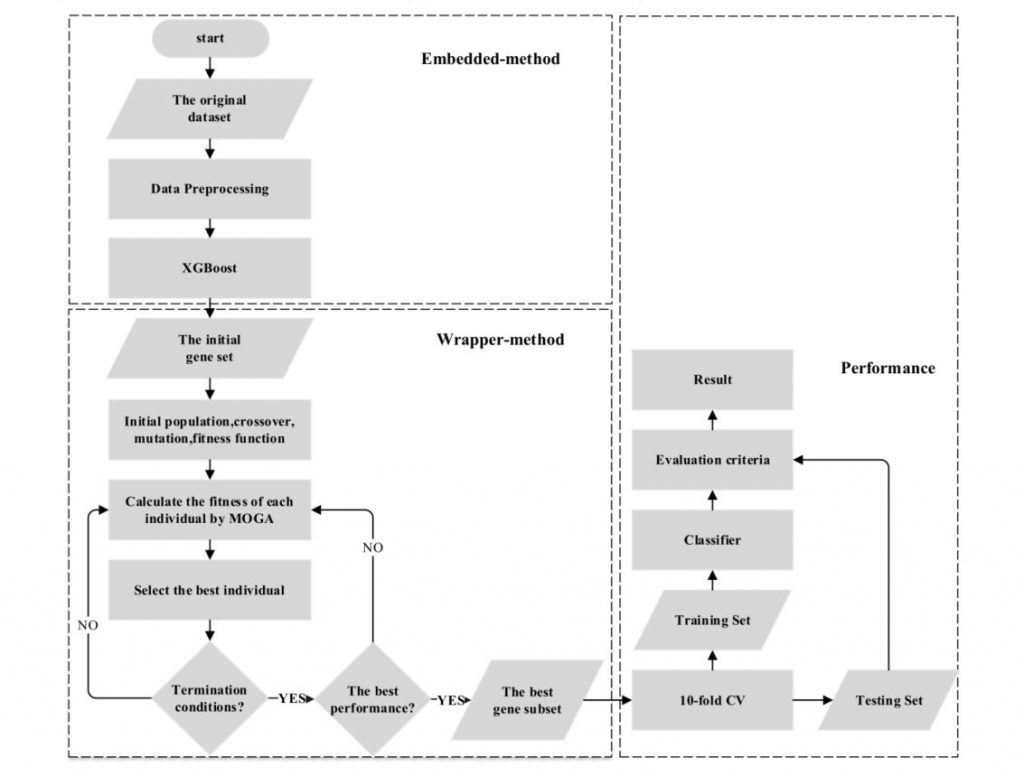

Overall process of XGBoost-MOGA approach

In multi-objective genetic algorithms (MOGAs), the single fitness function is replaced by a set of objective functions that evaluate different aspects of the solution quality. The algorithm maintains a population of solutions that represent different trade-offs among the objectives, approximating the Pareto front. The goal is to find a diverse set of solutions that are non-dominated, meaning no other solution is at least as good on every objective and strictly better on at least one.

MOGAs use specialized selection and diversity preservation mechanisms to encourage the exploration of the Pareto front and maintain a well-distributed set of solutions. Some popular MOGAs include NSGA-II, SPEA2, and MOEA/D.
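The dominance test at the heart of these methods is small enough to show directly. The sketch below assumes every objective is to be minimised and uses made-up (cost, error) pairs; it filters out the non-dominated points by brute force, which is fine for small sets. Algorithms such as NSGA-II build on this test with ranked non-dominated sorting and a crowding-distance measure.

```python
def dominates(a, b):
    """True if a is no worse than b on every objective and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(points):
    """Keep the points that no other point dominates (all objectives minimised)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical (cost, error) pairs for seven candidate solutions.
candidates = [(1, 9), (2, 7), (3, 8), (4, 4), (6, 5), (7, 1), (8, 3)]
print(pareto_front(candidates))  # [(1, 9), (2, 7), (4, 4), (7, 1)]
```

Each surviving point is a different trade-off: none of them can be improved on one objective without giving something up on the other.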

Adaptive and Self-Tuning Approaches: To address the challenge of parameter tuning, researchers have developed adaptive and self-tuning approaches for genetic algorithms. These methods aim to automatically adjust the algorithm’s parameters during the optimization process based on the characteristics of the problem and the progress of the search.

One approach is to use adaptive operators, where the probabilities of applying different genetic operators (such as crossover and mutation) are dynamically adjusted based on their past performance. Operators that have been more successful in generating high-quality offspring are given higher probabilities, while less effective operators are suppressed.

Another approach is to use self-adaptive parameters, where the parameters themselves are encoded into the individuals’ genomes and evolve alongside the solutions. This allows the algorithm to automatically discover optimal parameter settings for each specific problem instance.
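Self-adaptation is easiest to see with real-valued genes. The sketch below borrows the log-normal step-size rule from evolution strategies rather than from a classic bit-string genetic algorithm: each individual carries its own mutation step size `sigma`, which is mutated first and then used to perturb the genes. The sphere objective, the learning rate `tau = 1 / sqrt(dim)`, and the keep-the-best-of-parents-and-offspring selection are all assumptions made for illustration.

```python
import math
import random

def sphere(xs):
    """Toy objective to minimise; the optimum is at the origin."""
    return sum(x * x for x in xs)

def mutate(rng, individual, tau):
    """Mutate the step size first, then use the new step size on the genes."""
    genes, sigma = individual
    new_sigma = max(1e-6, sigma * math.exp(tau * rng.gauss(0, 1)))
    new_genes = [g + new_sigma * rng.gauss(0, 1) for g in genes]
    return new_genes, new_sigma

def evolve(dim=5, pop_size=20, generations=100, seed=0):
    rng = random.Random(seed)
    tau = 1 / math.sqrt(dim)  # assumed learning rate for the step size
    population = [([rng.uniform(-5, 5) for _ in range(dim)], 1.0) for _ in range(pop_size)]
    history = [min(sphere(genes) for genes, _ in population)]
    for _ in range(generations):
        offspring = [mutate(rng, rng.choice(population), tau) for _ in range(pop_size * 2)]
        # Parents compete with offspring, so the best individual is never lost.
        population = sorted(population + offspring, key=lambda ind: sphere(ind[0]))[:pop_size]
        history.append(sphere(population[0][0]))
    return population[0], history

(best_genes, best_sigma), history = evolve()
print(f"objective={sphere(best_genes):.6f} sigma={best_sigma:.4f}")
```

Individuals whose step size suits the current stage of the search tend to produce better offspring, so useful step sizes spread through the population without being set by hand.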

Theoretical Developments: Alongside the practical advancements, there have been significant theoretical developments in the field of genetic algorithms. One notable example is the schema theorem, which provides a mathematical foundation for understanding how genetic algorithms work.

The schema theorem states that short, low-order schemata (building blocks) with above-average fitness are expected to receive more instances in each subsequent generation, a growth that compounds exponentially for as long as they stay above average. This theorem helps explain the effectiveness of genetic algorithms in efficiently exploring the search space by combining and propagating promising building blocks.
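In its usual textbook form, stated for a canonical genetic algorithm with fixed-length bit strings, fitness-proportionate selection, one-point crossover, and bit-flip mutation, the theorem is a lower bound on an expectation:

$$
E\big[m(H, t+1)\big] \;\ge\; m(H, t)\,\frac{f(H, t)}{\bar{f}(t)}\left[\,1 - p_c\,\frac{\delta(H)}{l - 1} - o(H)\,p_m\right]
$$

Here $m(H, t)$ is the number of individuals matching schema $H$ in generation $t$, $f(H, t)$ is their average fitness, $\bar{f}(t)$ is the average fitness of the whole population, $\delta(H)$ is the defining length of the schema, $o(H)$ is its order (the number of fixed positions), $l$ is the string length, and $p_c$ and $p_m$ are the crossover and per-bit mutation probabilities. The bracketed term is why short, low-order schemata fare best: a small $\delta(H)$ and a small $o(H)$ make disruption by crossover and mutation less likely. Note that the bound covers a single generation, so it describes a tendency rather than a guarantee of long-run growth.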

Real-world Applications of Genetic Algorithms

Genetic algorithms have been applied across various domains to solve complex optimization problems, demonstrating their versatility and effectiveness. Here’s an overview of how GAs have been utilized in different fields:

- In the realm of urban transportation, GAs have been used to produce optimal or near-optimal intersection traffic signal timing strategies, focusing on minimizing automobile delay and improving traffic flow. This application showcases the potential of GAs to enhance traffic management systems, leading to smoother traffic flow and reduced congestion.

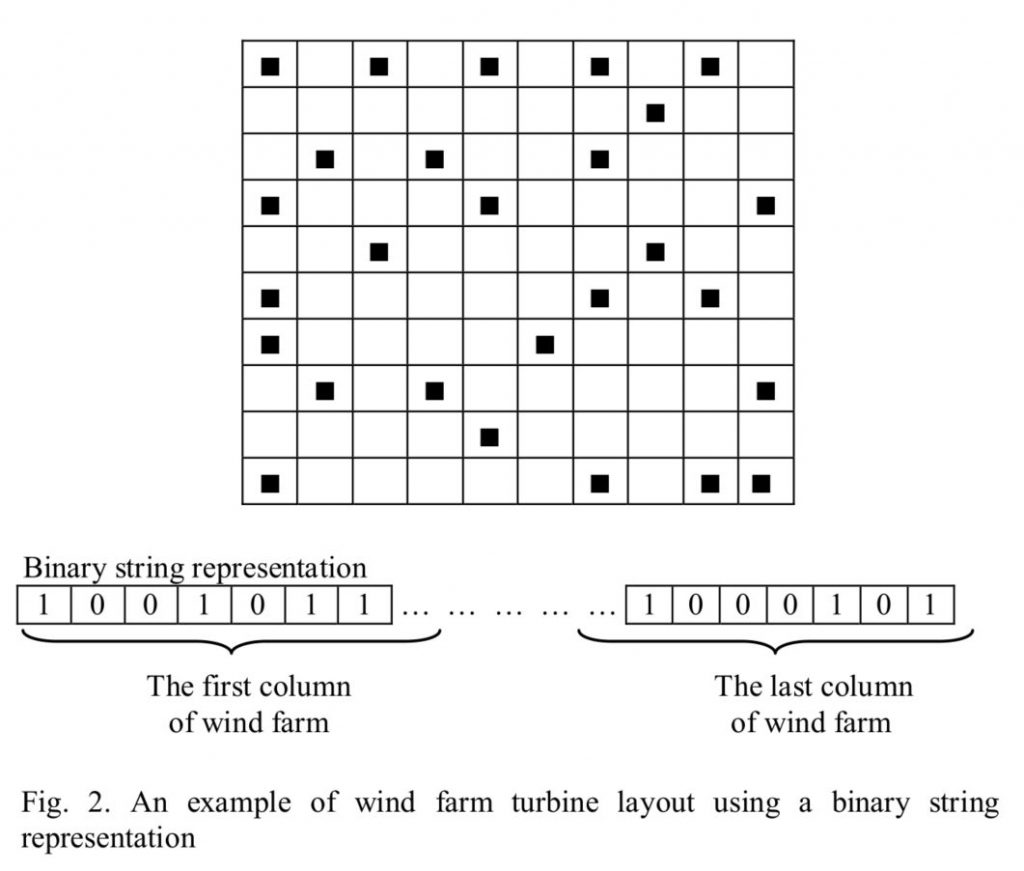

- The optimization of wind farm layouts is another area where GAs have shown significant promise. By optimizing the layout of wind farms, GAs aim to maximize energy output while minimizing wake effects and land-use constraints. This approach not only increases the efficiency and profitability of wind energy projects but also contributes to the sustainable development of renewable energy sources.

The steady-state scheme genetic algorithm for training a convolutional neural network

- In the field of artificial intelligence and machine learning, GAs have been employed to evolve the architectures of deep neural networks. This process, known as neuroevolution, involves encoding network architectures as genomes and evaluating their performance on specific tasks. GAs can evolve well-performing network structures tailored to particular problems, demonstrating their capability to discover novel and efficient solutions for tasks such as image classification, reinforcement learning, and natural language processing.

These examples underscore the broad applicability and effectiveness of GAs in tackling complex optimization challenges across different domains. By leveraging the principles of natural selection and genetics, GAs provide a powerful tool for finding optimal or near-optimal solutions to problems that are otherwise difficult to solve using traditional methods.

Future Trends and Research Directions

The field of genetic algorithms in AI and ML is evolving rapidly, with several exciting trends and research directions emerging.

- Scalability and Efficiency Improvements: Researchers are focusing on improving the scalability and efficiency of genetic algorithms through parallel and distributed computing, GPU acceleration, and advanced data structures. Techniques like surrogate models and fitness approximation are being explored to reduce the computational burden.

- AI/ML Integration and Automation: The integration of genetic algorithms with deep learning, reinforcement learning, and other advanced ML techniques is a promising direction. Genetic algorithms are being used for hyperparameter tuning, automated machine learning (AutoML), and optimizing deep learning architectures.

- New Genetic Operator Innovations: Researchers are developing new genetic operators and strategies to enhance performance and adaptability. Adaptive crossover and mutation operators, co-evolutionary algorithms, and other innovations are being explored to maintain population diversity and prevent premature convergence.

- Emerging Applications: Genetic algorithms are finding applications in various domains, including generative design, evolutionary robotics, and drug discovery. These emerging areas showcase the versatility and potential of genetic algorithms to tackle complex problems.

Conclusion

Just as nature’s intricate mechanisms have shaped the diversity and resilience of living organisms, genetic algorithms have transformed the landscape of problem-solving in AI and ML. By embodying the essence of selection, variation, and survival of the fittest, these algorithms have proven their mettle in navigating the complex labyrinth of optimization challenges.

However, the journey of genetic algorithms is far from complete. The challenges that lie ahead – scalability, efficiency, and seamless integration – are not insurmountable obstacles, but rather evolutionary pressures that will drive further innovation and refinement.

As we continue to explore uncharted territories and push the limits of computational capabilities, genetic algorithms will undoubtedly play a pivotal role in shaping the future of AI and ML.