November 2022. ChatGPT drops, and the AI world shifts on its axis. Little did we know, this was the digital equivalent of splitting the atom. Fast forward to today, and Large Language Models (LLMs) have gone from party tricks to power tools faster than you can say “Hey Siri.” But let us cut through the hype and examine what is really changing.

First off, the versatility of LLMs has skyrocketed. We are no longer talking about models confined to narrow tasks or specific domains. Today’s LLMs are digital polymaths, equally at home parsing code, analyzing market trends, or drafting legal briefs. This adaptability is pushing them into every corner of the digital ecosystem, from edge devices to cloud infrastructure.

But here is where it gets interesting: the real innovation is not just in scale but in efficiency. Model distillation techniques are allowing us to create smaller, nimbler LLMs that pack a serious punch. It is not about building bigger anymore. It is about building smarter. These streamlined models are opening up new possibilities for deployment in resource-constrained environments. Suddenly, the power of an LLM can fit in your pocket – literally.
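To make the distillation idea concrete, here is a minimal sketch of the classic soft-target distillation loss, in which a small "student" model is trained to match the softened output distribution of a larger "teacher." This is an illustration under stated assumptions, not a description of how any particular production model was built: the function names, the temperature of 2.0, and the 50/50 weighting are all hypothetical choices.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Blend a soft-target term (match the teacher) with a hard-label term.

    temperature and alpha are illustrative defaults, not recommended values.
    """
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # KL(teacher || student) on the temperature-softened distributions
    kl = sum(t * math.log(t / s)
             for t, s in zip(teacher_soft, student_soft) if t > 0)
    # Standard cross-entropy of the student against the ground-truth label
    hard = -math.log(softmax(student_logits)[true_label])
    # The T^2 factor keeps the soft term's scale comparable across temperatures
    return alpha * (temperature ** 2) * kl + (1 - alpha) * hard

if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss([3.8, 1.1, 0.4], teacher, true_label=0))
    print(distillation_loss([0.5, 1.0, 4.0], teacher, true_label=0))
```

A student whose logits track the teacher's gets a lower loss than one that disagrees, which is the signal that lets a small model inherit behavior from a large one.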

However, let’s not kid ourselves. We are approaching the limits of our current modeling techniques. The low-hanging fruit has been picked, and the next leap forward will not come from simply scaling up. The search is on for novel approaches that can break through these barriers. Are we looking at a paradigm shift in how we conceptualize and construct these models? Time will tell.

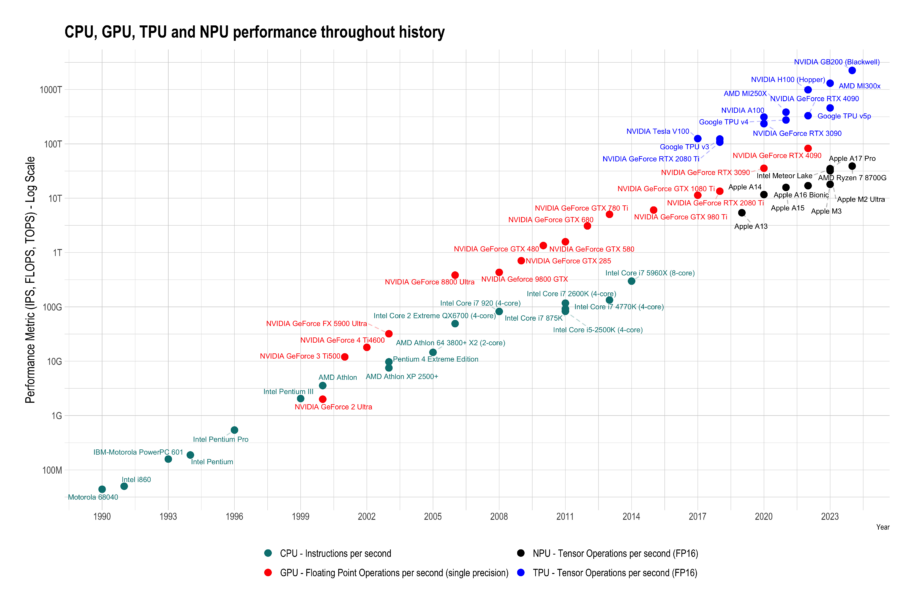

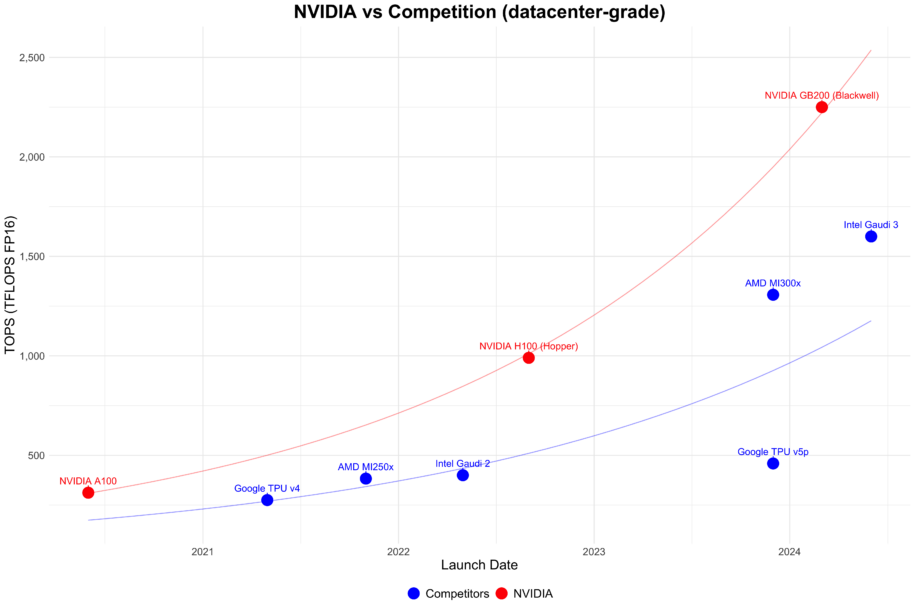

On the hardware front, we are seeing an acceleration that would make Moore blush. We’re doubling compute capacity roughly every six months (far outpacing Moore’s Law!) – a pace that’s both exhilarating and unsustainable. The hundreds of billions of dollars that enterprises are investing in hardware are reshaping the tech landscape. Nvidia’s dominance is being challenged as tech giants like Google and Amazon, along with a new crop of startups, jump into the chip-making game.
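A quick back-of-the-envelope calculation shows how wide the gap between those two doubling rates becomes. The sketch below assumes the six-month doubling period quoted above and takes Moore's Law as a doubling roughly every 24 months, which is the common reading but still an assumption here; the function name is hypothetical.

```python
def growth_factor(months, doubling_period_months):
    """Multiplicative growth after `months` at a fixed doubling period."""
    return 2 ** (months / doubling_period_months)

if __name__ == "__main__":
    for years in (1, 2, 5):
        months = 12 * years
        fast = growth_factor(months, 6)    # six-month doubling (from the text)
        moore = growth_factor(months, 24)  # assumed two-year doubling
        print(f"{years} yr: {fast:,.0f}x vs {moore:.2f}x")
```

Over two years, six-month doubling yields a 16x increase against 2x for a two-year doubling period, which is why the pace is hard to sustain for long.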

But here’s the rub – all this computing firepower comes at a cost. Energy consumption and heat dissipation are not just engineering challenges anymore. They are existential threats to the ongoing expansion of AI capabilities. We are beyond just pushing the boundaries of computing – we’re testing the limits of our power grids and real estate markets. The next big AI breakthrough might come from a power management innovation rather than a clever new algorithm.

As we stand at this inflection point, one thing is clear: LLMs are no longer optional. They are becoming as fundamental to modern computing as databases or the internet itself. The question is not whether they will transform your industry, but how quickly you can adapt to the new reality they are creating.

How LLMs Are Reshaping the Landscape of Business

The integration of LLMs into enterprise architectures represents a paradigm shift in software development and business operations. We are witnessing a fundamental reimagining of application design, driven by the unique characteristics and capabilities of these models.

At the core of this shift lies the challenge of building deterministic systems around inherently probabilistic components. LLMs introduce an element of unpredictability at every step of the process, forcing developers to rethink traditional approaches to error handling, testing, and quality assurance. Systems must now be designed with the flexibility to handle the unexpected, often creative, outputs of LLMs, going beyond planning for known edge cases.
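One common way to wrap a probabilistic component in deterministic guarantees is a validate-and-retry loop: the model's output is only accepted once it passes strict, rule-based checks. The sketch below is a minimal illustration under assumptions. `generate` stands in for whatever LLM client you use, `make_flaky_model` is a hypothetical test double that replays canned responses, and the JSON contract and retry count are illustrative.

```python
import json

def call_with_validation(generate, prompt, required_keys, max_attempts=3):
    """Call a probabilistic generator until its output passes deterministic checks."""
    last_error = "no attempts made"
    for attempt in range(1, max_attempts + 1):
        raw = generate(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"attempt {attempt}: invalid JSON ({exc.msg})"
            continue
        if not isinstance(data, dict):
            last_error = f"attempt {attempt}: expected a JSON object"
            continue
        missing = [key for key in required_keys if key not in data]
        if missing:
            last_error = f"attempt {attempt}: missing keys {missing}"
            continue
        return data  # output satisfied every check
    raise ValueError(f"no valid output after {max_attempts} attempts; {last_error}")

def make_flaky_model(responses):
    """Hypothetical stand-in for an LLM client: replays canned responses in order."""
    remaining = iter(responses)
    return lambda prompt: next(remaining)

if __name__ == "__main__":
    model = make_flaky_model([
        "Sure! Here is your answer.",            # not JSON at all
        '{"sentiment": "positive"}',             # valid JSON, missing a key
        '{"sentiment": "positive", "score": 0.9}',
    ])
    print(call_with_validation(model, "Classify this review.", ["sentiment", "score"]))
```

The caller only ever sees a well-formed result or an explicit failure, so the unpredictability stays contained inside the wrapper.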

This new paradigm is giving rise to novel architectural patterns. We are seeing the emergence of prompt management systems, similar to traditional database management systems, designed to version, test, and optimize prompts at scale. There is also a growing emphasis on robust feedback loops and continuous learning systems that can fine-tune and adapt LLM behaviors in production environments.
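As a rough illustration of what "versioning prompts" can mean in practice, here is a toy in-memory prompt registry. It is a sketch only: the `PromptRegistry` class and its methods are hypothetical names, and a real system would add persistence, evaluation runs, and access control on top.

```python
from dataclasses import dataclass, field

@dataclass
class PromptRegistry:
    """Toy in-memory store that keeps every version of each named prompt."""
    _versions: dict = field(default_factory=dict)

    def register(self, name, template):
        """Append a new template version and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)

    def get(self, name, version=None):
        """Return a specific version, or the latest when version is None."""
        versions = self._versions[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name!r} has no version {version}")
        return versions[version - 1]

    def render(self, name, version=None, **variables):
        """Fill the chosen template's placeholders with the given variables."""
        return self.get(name, version).format(**variables)

if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register("summarize", "Summarize this text: {text}")
    registry.register("summarize", "Summarize in {n} bullet points: {text}")
    print(registry.render("summarize", version=1, text="LLMs are everywhere."))
    print(registry.render("summarize", n=3, text="LLMs are everywhere."))
```

Pinning a version number lets a regression test keep exercising the old prompt while a new one is evaluated side by side.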

Prompt engineering has evolved into a critical engineering discipline in its own right. As the complexity of LLM-powered systems grows, we’re seeing the rise of specialized roles like “AI Interaction Designers” and “Prompt Optimization Engineers.”

Recently, a small team within Amazon migrated 30,000 production applications from older Java versions to Java 17. This comprehensive re-engineering effort leveraged LLMs to refactor, optimize, and in some cases completely reimagine legacy systems. The effort saved over 4,500 developer-years of manual labor and delivers $260 million in annual savings.

However, the path to successful LLM integration is far from straightforward. Many early adopters struggled to realize ROI, often due to a misalignment between LLM capabilities and business objectives. The key lesson emerging from these experiences is the need for a nuanced understanding of LLM strengths and limitations.

One of the most significant impacts of LLMs is their ability to bridge the longstanding divide between structured and unstructured data. By providing a unified interface to interact with both databases and document repositories, LLMs are enabling new forms of knowledge discovery and decision support. This capability is particularly powerful in domains like legal research, scientific literature review, and market intelligence, where insights often lie at the intersection of structured data and unstructured text.

Moreover, the integration of LLMs is raising complex questions about accountability and governance. As these models take on more critical roles in decision-making processes, organizations are grappling with issues of explainability, bias mitigation, and regulatory compliance.

What does the LLM-powered future look like?

The rapid evolution we have witnessed since ChatGPT’s debut has set a blistering pace, but what lies ahead may redefine our very conception of technological progress.

Expect a deluge of LLM application deployments and success announcements. Organizations that have been quietly experimenting and developing over the past year are poised to unveil their AI-driven innovations. These will not be mere proof-of-concepts or flashy demos. We are talking about robust, production-ready systems that deliver tangible business value. The era of AI as a speculative venture is behind us. We are entering a phase where LLMs are becoming core components of enterprise architecture.

Private LLM deployments are set to surge. The barriers to entry for hosting powerful language models within corporate firewalls are crumbling. What was once a Herculean task, fraught with technical challenges and prohibitive costs, is becoming increasingly accessible. Expect to see a proliferation of industry-specific and company-tailored models, fine-tuned on proprietary data and optimized for niche applications. This democratization of AI capabilities will level the playing field, allowing smaller players to compete with tech giants in terms of AI-driven innovation.

However, we may be approaching a plateau in raw LLM performance improvements. The next iteration of GPT (let’s call it GPT-5 for argument’s sake) might offer incremental advancements rather than the quantum leaps we’ve grown accustomed to. This is not a sign of stagnation, but rather a shift in focus. The frontier of innovation is moving from model size and parameter count to more nuanced aspects of AI development.

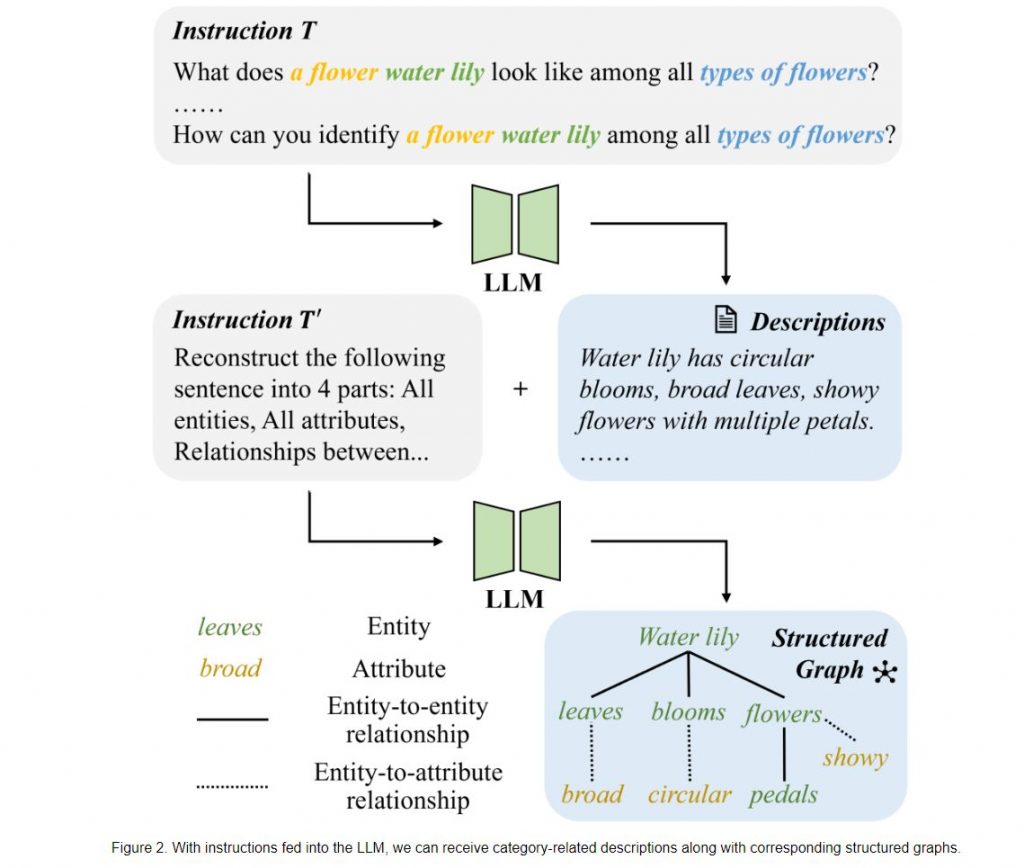

As a result, we are likely to see a rise in parallel techniques used in conjunction with LLMs. The integration of symbolic AI, knowledge graphs, and other AI paradigms with neural language models could unlock new capabilities and address some of the persistent challenges in areas like reasoning, factuality, and domain-specific expertise. This hybrid approach may well be the key to pushing beyond the current limitations of pure neural network-based systems.

The emergence of hyper-efficient small teams and one-person companies, supercharged by AI, will challenge traditional notions of organizational structure and productivity. A single developer armed with the right AI tools might soon be able to match the output of an entire team from just a few years ago. This shift will force us to reconsider fundamental assumptions about workforce composition, project management, and even the nature of work itself.

The competitive landscape is also in flux. Established players like OpenAI may find their dominance challenged as new entrants and existing tech giants pour resources into AI development. The next breakthrough could come from an unexpected quarter, reshaping the industry overnight.

Perhaps the most profound shift we will witness is the reimagining of organizational structures around AI capabilities. This goes beyond simple efficiency gains or automation. We are talking about fundamentally new ways of conceptualizing business processes, decision-making frameworks, and value creation.

The next 12 months promise to be a period of intense innovation, unexpected breakthroughs, and profound transformations.

Conclusion – Adapt or Fade Away

The imperative for businesses to rewire themselves around AI capabilities has never been more urgent. This rewiring goes beyond the superficial integration of AI tools. It demands a fundamental reevaluation of organizational structures, business processes, and even the very nature of value creation. Companies that view LLMs as mere productivity enhancers are missing the forest for the trees. These technologies have the potential to redefine entire industries, creating new markets and obliterating old ones.

In a mere 18 months, we have seen LLMs evolve from curiosities to necessities. The next 18 months promise an even more dramatic transformation. The question is not whether your industry will be affected, but how quickly you can position yourself to ride this wave of innovation.