The world of data, and data infrastructure, has changed dramatically over the past decade. Traditional databases, designed to store information in a structured format, have given way to massive warehouses of unstructured data spread across multiple servers in different locations. Not too long ago, we were used to seeing monolithic systems dominated by behemoths such as Oracle and IBM. If you were an analyst or business user who needed access to this data (and who doesn't?), that meant working with slow-moving systems that were incredibly difficult to manage.

The birth of a new data infrastructure software stack

The increasing complexity of data infrastructure eventually drove the need for modern software stacks that could help organizations run complex applications while remaining cost-effective. The open source movement helped by dramatically lowering the cost of assembling complex applications from building blocks such as Elasticsearch for full-text search and PyTorch for modeling. Robust packaging and operation of this software improved the usability, stability, and economics of these systems.

The Modern Data Stack (MDS), which has gained considerable traction over the past decade, builds on the open source movement. It is a collection of ideas, tools, and methodologies for building the enterprise data stack.

Challenges in scaling MDS

In the 2010s, we saw rapid adoption of open source tools within the MDS. However, after their initial success, many organizations' initiatives around these tools ran into challenges when it came to scaling:

-

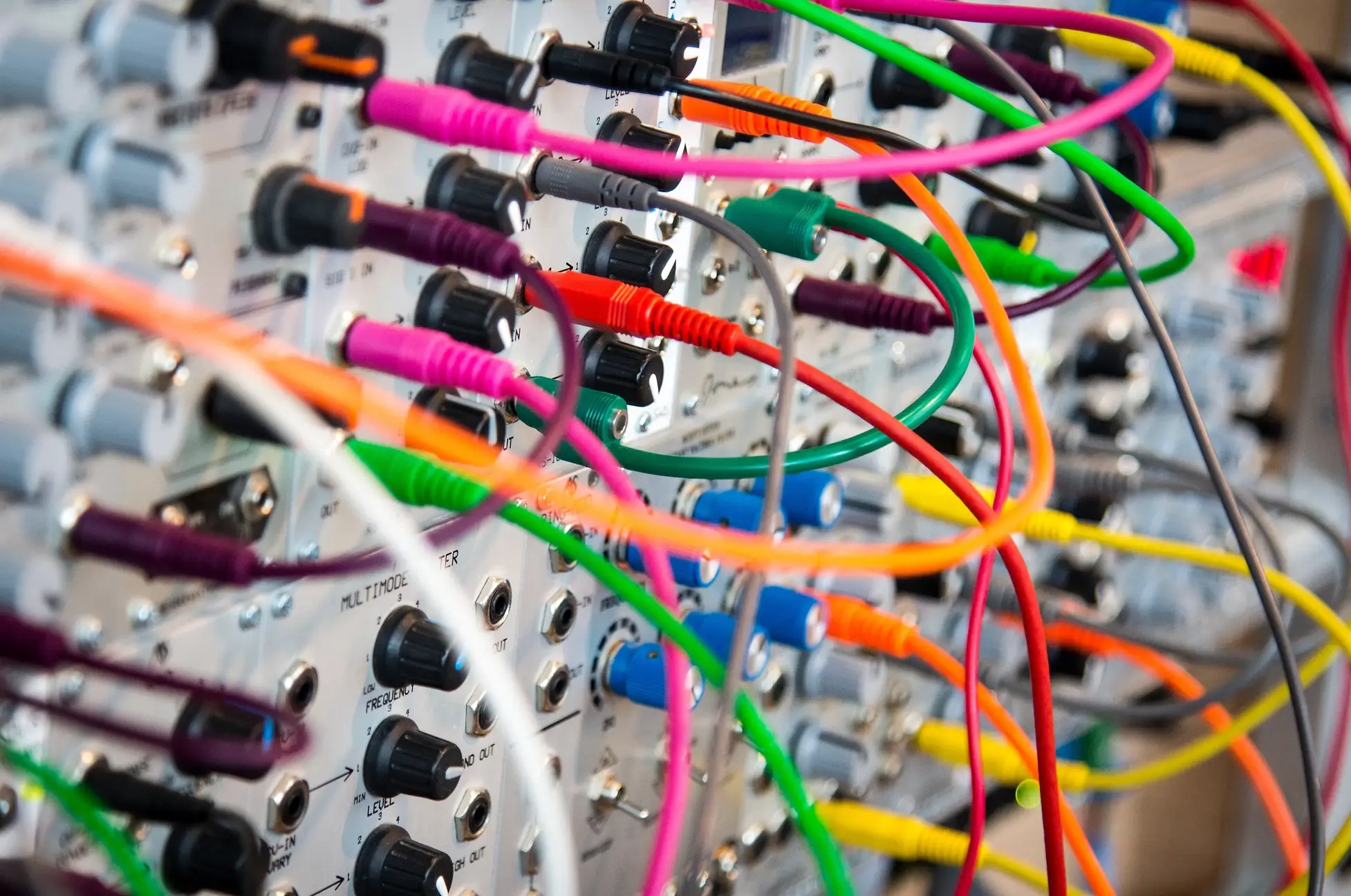

The cognitive overload due to the number of tools, configurations, methodologies, and interactions that organizations and teams had to keep up with was overwhelming, leading to burnout and high attrition rates among talent

- The learning curve for these technologies was incredibly steep. Most of these open source tools were built at sophisticated organizations such as Netflix, Google, and Uber, and they don't necessarily suit the needs of organizations with smaller deployments operating at a fraction of that scale.

- The pace of innovation in the space also meant shorter lifespans for newer technologies. With newer, better, faster, and more efficient tools arriving on the scene so quickly, engineers had to learn and unlearn rapidly.

- The data science community holds several conflicting viewpoints, resulting in a lack of clarity about which approach to adopt (and what's best for a given business). More often than not, the only way to resolve this is to build, which is both expensive and time-consuming.

- If you've been following hype cycles such as Gartner's, it probably won't surprise you that technology investments have an end date, and that it arrives much sooner than it did a decade ago. Technologies like Hadoop, NoSQL, and Deep Learning, which were considered “hot” not too long ago, have already passed the peak of the Gartner Hype Cycle.

Points #1 and #2 have played a major role in raising stress levels across the industry and in limiting the talent available to adopt and use these technologies. We've seen a similar trend in the DevOps space, where the supply of developer talent has not kept up with the demand for new digital services. Tyler Jewell of Dell Capital has been quite vocal about this problem, which he argues has led to high burnout and to an average professional developer career span of less than 20 years. He recently posted a thread taking a deep dive into the complexity of the developer-led landscape, and we can't help but notice several parallels between his claims and the MLOps space.

Points #3 and #4 highlight the plight of today's data folks. As if solving problems weren't enough, they end up spending more time figuring out “how” to proceed than thinking about what needs to be done or what the expected outcome is.

A change is coming…

We're seeing a shift in the data tools organizations use, driven by a growing recognition that many of them have no choice but to rely on third-party vendors for their infrastructure needs. This is due not only to budget constraints but also to other factors, such as data security and provenance.

In addition, there's growing demand for automated processes that let enterprises easily migrate workloads from one provider to another without disrupting operations or causing downtime. We're seeing the effects of this in industries such as financial services, where data management is often critical to success (credit rating agencies, for example).

As a result of these factors, as well as the challenges listed above, there have been several developments in the community:

- Organizations are increasingly emphasizing the need to build trust in their data, giving rise to tools that focus on data quality and data governance.

- There is growing emphasis on tying Machine Learning and Data Science initiatives to outcomes, and on business models that are explicitly aligned with specific business use cases.

- Ever-increasing cost and complexity are driving consolidation through feature extensions, acquisitions, and integrations. Snowflake, for example, is rapidly growing its list of partners to become a full analytical application stack.

- Given the complexity that follows model deployment, we're seeing the emergence of tools such as NannyML, which help estimate model performance, detect drift, and improve models in production through iterative deployments. We see this as a way for businesses to close the loop between the business, the data, and the model.

- A new organization, the AI Infrastructure Alliance, has emerged to bring together the essential building blocks for Artificial Intelligence applications. It has been working on a Canonical Stack for Machine Learning, which aims to cut through the noise created by a plethora of tools claiming to be the “latest and greatest” and to help non-tech companies level up fast.

- The definition of the MDS is being extended to include data products, apps, and other elements, making the MDS full-stack. New products and services are emerging that slice the space by target user (e.g., data scientists vs. analysts), skill availability, and time to outcome realization.

- The MDS user base is expanding to include analytics teams and business users. This is driving improvements in user experience, low-code interfaces, and automation.

- And finally, we're seeing the emergence of approaches such as the “Post-Modern Stack”, which is essentially a deconstruction of the MDS and MLOps stacks. These approaches emphasize relevance to the business as well as the downstream consumption of generated features to produce business value.

What this means

Consolidation of tools and platforms, simpler platform development, and the use of managed services are happening across the industry, driven by businesses' need to cope with complexity. It's an exciting time to be part of this space, and we can't wait to see how the landscape evolves over the course of the year.

Scribble Data is keenly aware of this evolution as it unfolds. We focus on one specific problem: feature engineering for advanced analytics and data science use cases. This problem space has steadily grown in importance and has evolved in ways consistent with the points above. With the right technology mix and solution focus, we are able to align product value to use cases while achieving 5x faster time to value (TTV) for each use case.